Custom CA certificate support, configurable ONVIF request timeout, and fixes for corrupted saved clips

New

- Support for custom CA certificates in containers via volume mounting, enabling operation in corporate networks that use SSL interception. New gateway installer commands: `set-certs`, `clear-certs`, `show-certs`.

- New `onvif_request_timeout_secs` gateway config parameter for configuring the ONVIF request timeout via Console and API. If you have slow cameras, set it under Console -> Gateway -> Settings tab.
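These notes don't describe how the gateway's ONVIF client applies `onvif_request_timeout_secs`. The sketch below only illustrates, with Python's standard library, what a per-request timeout of this kind bounds: how long a client waits for a camera's SOAP response before giving up. The simulated slow camera, the `/onvif/device_service` path, the `onvif_call`/`try_call`/`demo` helpers, and the timeout values are all hypothetical, not the gateway's implementation or defaults.

```python
import http.server
import socket
import threading
import time
import urllib.error
import urllib.request

# Hypothetical values for illustration only; not the gateway's defaults.
ONVIF_REQUEST_TIMEOUT_SECS = 0.25  # too short for the simulated camera
CAMERA_DELAY_SECS = 1.0            # how long the simulated camera takes to reply


class SlowCameraHandler(http.server.BaseHTTPRequestHandler):
    """Simulated (hypothetical) ONVIF endpoint that answers after a delay."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)  # consume the SOAP request body
        time.sleep(CAMERA_DELAY_SECS)  # emulate a slow camera
        body = b"<Envelope/>"
        try:
            self.send_response(200)
            self.send_header("Content-Type", "application/soap+xml")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        except OSError:
            pass  # the client may already have timed out and closed the socket

    def log_message(self, format, *args):
        pass  # silence per-request logging


def onvif_call(url, soap_body, timeout_secs):
    """POST a SOAP request; raise if no response arrives within timeout_secs."""
    req = urllib.request.Request(
        url, data=soap_body, headers={"Content-Type": "application/soap+xml"}
    )
    with urllib.request.urlopen(req, timeout=timeout_secs) as resp:
        return resp.read()


def try_call(url, timeout_secs):
    """Return "ok" if the camera answered, "timed out" if the timeout fired first."""
    try:
        onvif_call(url, b"<Envelope/>", timeout_secs)
        return "ok"
    except socket.timeout:  # timed out waiting for the response
        return "timed out"
    except urllib.error.URLError as err:  # timed out while connecting or sending
        if isinstance(err.reason, socket.timeout):
            return "timed out"
        raise


def demo():
    """Call the slow camera once with a short timeout and once with a long one."""
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), SlowCameraHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = "http://127.0.0.1:%d/onvif/device_service" % server.server_address[1]
    try:
        too_short = try_call(url, ONVIF_REQUEST_TIMEOUT_SECS)
        long_enough = try_call(url, CAMERA_DELAY_SECS + 2.0)
    finally:
        server.shutdown()
        server.server_close()
    return too_short, long_enough
```

Calling `demo()` returns `('timed out', 'ok')`: the same slow camera fails under a timeout shorter than its response time and succeeds once the timeout is raised above it, which is the situation the new setting is meant to address.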

Fixed

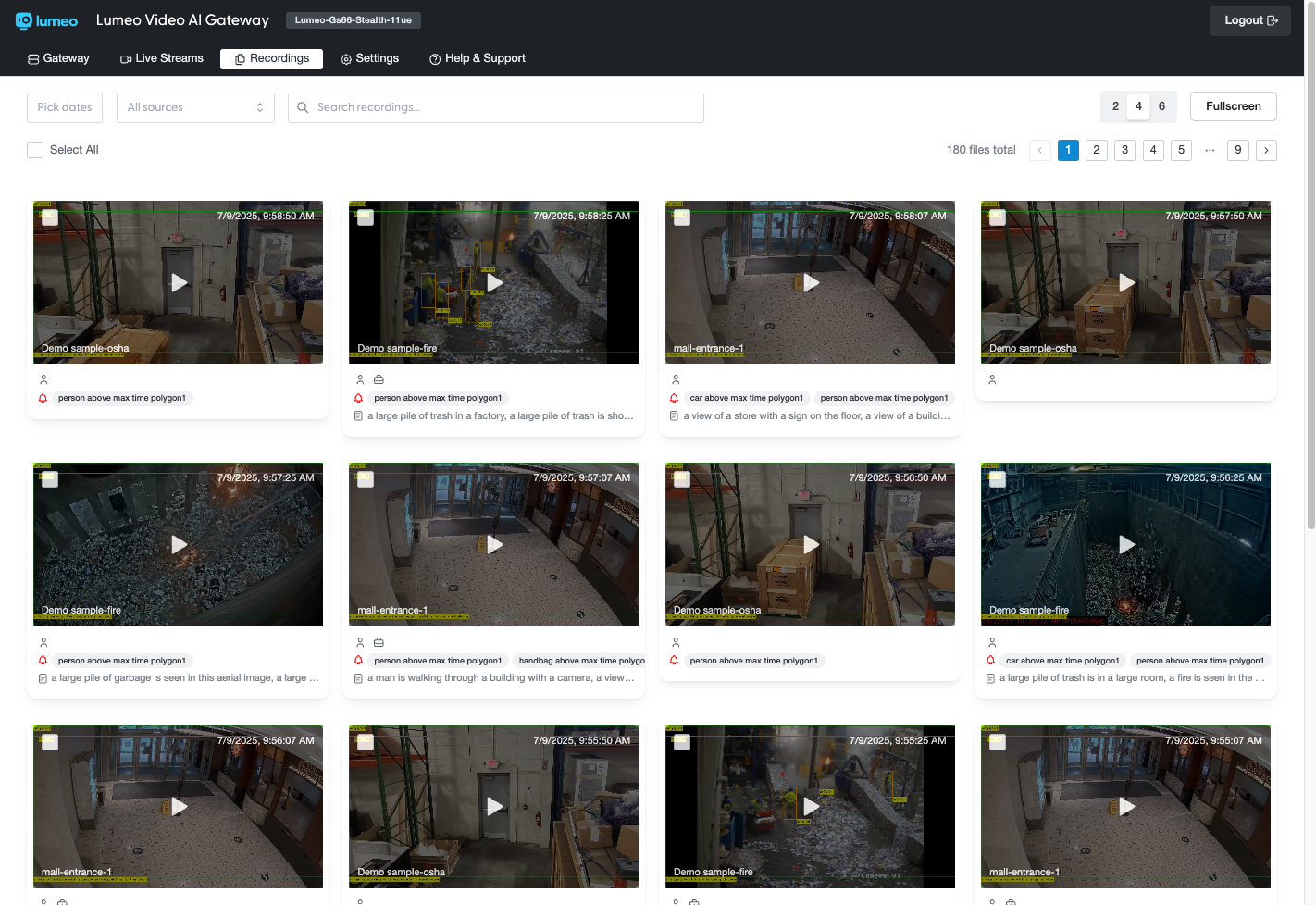

- Fixed a bug where the first clip in a deployment run was always corrupted.

- File-based deployments are no longer restarted after a runner crash.