Save Clip

Save a clip and associated metadata when a specific condition is met

Overview

The node saves clips in MP4 format, along with the Pipeline metadata for the frames in each clip, as a files API object.

Files created by this node are also listed in the Console under the Deployment detail page when the save location is Lumeo cloud.

Format of output

This node creates 2 files for every clip it saves:

- Media file (MP4 format), named `<node_name>-YYYY-MM-DDThhmmss.usZ.mp4`. Ex. `clip1-2023-01-20T083909.952Z.mp4`. The timestamp in the file name is the creation timestamp. This file contains the media for the clip.

- Metadata file (JSON format), named `<node_name>-YYYY-MM-DDThhmmss.usZ.mp4.json`. Ex. `clip1-2023-01-20T083909.952Z.mp4.json`. This file contains the metadata for all the frames contained in the media file.
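The naming scheme above can be parsed to recover the node name and creation time, and to locate the matching metadata file. The following is a minimal sketch, not part of Lumeo's tooling: `parse_clip_name` is a hypothetical helper, and it assumes the trailing `Z` denotes UTC and that the fractional-seconds part has at most six digits.

```python
import re
from datetime import datetime, timezone

# Matches names such as clip1-2023-01-20T083909.952Z.mp4
CLIP_NAME = re.compile(
    r"^(?P<node>.+)-(?P<ts>\d{4}-\d{2}-\d{2}T\d{6}\.\d{1,6})Z\.mp4$"
)

def parse_clip_name(file_name: str) -> dict:
    """Split a media file name into node name, creation time, and metadata file name."""
    match = CLIP_NAME.match(file_name)
    if match is None:
        raise ValueError(f"not a Save Clip media file name: {file_name}")
    created = datetime.strptime(match["ts"], "%Y-%m-%dT%H%M%S.%f")
    return {
        "node_name": match["node"],
        # Assumption: the trailing Z means the timestamp is in UTC.
        "created_at": created.replace(tzinfo=timezone.utc),
        # The metadata file is the media file name with .json appended.
        "metadata_file": file_name + ".json",
    }

info = parse_clip_name("clip1-2023-01-20T083909.952Z.mp4")
print(info["node_name"], info["created_at"].isoformat(), info["metadata_file"])
```

Because the metadata file name is the media file name plus `.json`, the two files for a clip can always be paired without parsing the timestamp.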

Inputs & Outputs

- Inputs: 1, Media Format: Encoded Video

- Outputs: 1, Media Format: Encoded Video

- Output Metadata: None

Properties

| Property | Value |

|---|---|

max_duration | Maximum duration for the clip, in seconds. Once this duration is reached, a new clip will be started. |

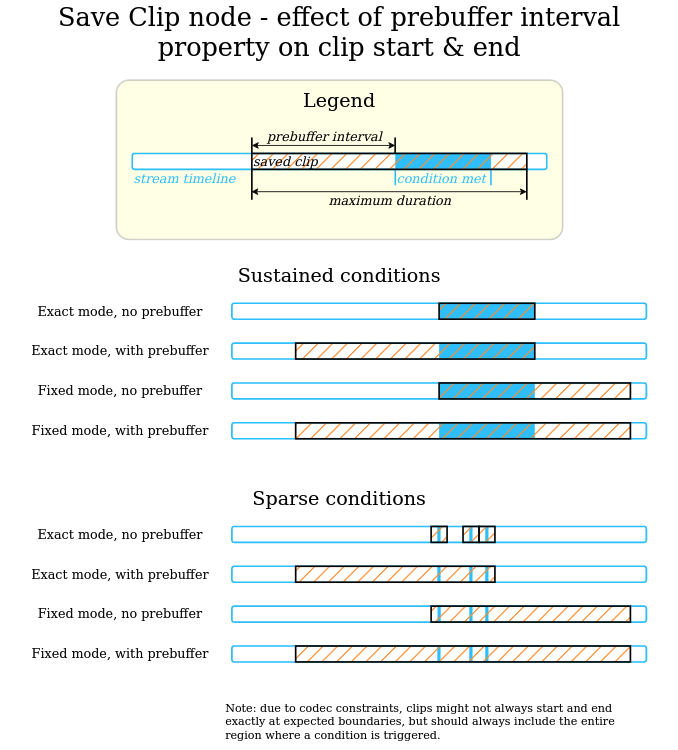

prebuffer_interval | Duration, in seconds, of video to record before the trigger condition is met. Note: See section below on how Pre-buffer works depending on the trigger_mode property. |

max_size | Maximum size of a clip in bytes. Once this size is reached, a new clip will be started. |

location | Where to save clips & associated metadata. Options : local : Save to local disk, in the folder specified under path property. lumeo_cloud : Upload to Lumeo's cloud service. For this location, Lumeo will always create a files object for each clip. s3 : Upload to a custom S3 bucket. |

path | Path to save the clips, if location == local. If you change the defaults, ensure that the path is writable by lumeod user. If location is set to lumeo_cloud clips are temporarily stored at /var/lib/lumeo/clip_upload (till they are uploaded). |

max_edge_files | Maximum number of files to keep on local disk, when location == local, for this specific node. Ignored when location == lumeo_cloud. If this property is not set, Lumeo will continue to save till the disk fills up. If it is set, Lumeo will save to local disk in a round robin manner (overwrite the 1st file once max_edge_files have been written). |

trigger | Start recording a clip once the trigger condition is met. The trigger expression must be a valid Dot-notation expression that operates on Pipeline Metadata and evaluates to True or False. See details here. ex. nodes.annotate_line_counter1.lines.line1.total_objects_crossed_delta > 0 |

trigger_mode | Exact : Record for as long as the trigger condition is met, but only up to the defined maximum duration. Fixed Duration : Record for the defined maximum duration once the trigger condition is met. |

s3_endpoint | AWS S3-compatible endpoint to upload to, excluding bucket name. Used when location == s3. ex. https://storage.googleapis.com or https://fra1.digitaloceanspaces.com or https://s3.us-east1.amazonaws.com |

s3_region | AWS S3-compatible endpoint Region. Used when location == s3. ex. us or fra1 |

s3_bucket | AWS S3-compatible bucket name. Used when location == s3. ex. lumeo-data |

s3_key_prefix | Folder prefix within the AWS S3-compatible bucket that files will be created in. Skip the trailing /. Used when location == s3. You can use dynamic keyword replacements by enclosing keywords in double curly braces {{keyword}}. Available keywords: application_id, application_name, deployment_id, deployment_name, gateway_id, gateway_name, source_id, year, month, day, hour, min, sec. ex. production/test or media/{{deployment_id}}/{{year}}-{{month}}-{{day}}/clips |

s3_access_key_id | S3 API Key ID with write access to the specified bucket. Used when location == s3. ex. GOOGVMQE4RCI3Z2CYI4HSFHJ |

s3_secret_access_key | S3 API Key with write access to the specified bucket. |
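To illustrate the `{{keyword}}` replacements described for s3_key_prefix, here is a minimal sketch of how such a template could expand. It is not Lumeo's implementation: `expand_key_prefix` is a hypothetical helper, the deployment ID is made up, and the zero-padding of month and day and the handling of unknown keywords are assumptions.

```python
import re
from datetime import datetime, timezone

def expand_key_prefix(template: str, values: dict) -> str:
    """Replace each {{keyword}} with its value; unknown keywords are left untouched."""
    def substitute(match):
        keyword = match.group(1)
        return str(values.get(keyword, match.group(0)))
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)

# Hypothetical values; in a real deployment these are supplied by Lumeo.
now = datetime(2023, 1, 20, 8, 39, 9, tzinfo=timezone.utc)
values = {
    "deployment_id": "dep-1234",
    "year": f"{now.year:04d}",
    "month": f"{now.month:02d}",  # assumption: zero-padded
    "day": f"{now.day:02d}",      # assumption: zero-padded
}

prefix = expand_key_prefix(
    "media/{{deployment_id}}/{{year}}-{{month}}-{{day}}/clips", values
)
print(prefix)  # media/dep-1234/2023-01-20/clips
```

A date-based prefix like this one groups each day's clips under their own folder, which makes lifecycle rules and manual cleanup in the bucket simpler.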

Pre-buffer Interval

The diagram below shows how the pre-buffer interval affects how the video clip is recorded.
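Independently of the diagram, the general idea of a pre-buffer can be sketched as a rolling window of recent frames that is handed to the recorder when the trigger fires. This is a generic illustration only: the `PreBuffer` class is hypothetical, it does not describe Lumeo's internals, and it does not model how the pre-buffer interacts with trigger_mode.

```python
from collections import deque

class PreBuffer:
    """Keep roughly the last prebuffer_interval seconds of frames."""

    def __init__(self, prebuffer_interval: float):
        self.prebuffer_interval = prebuffer_interval
        self.frames = deque()  # (timestamp_seconds, frame) pairs, oldest first

    def push(self, timestamp: float, frame) -> None:
        self.frames.append((timestamp, frame))
        # Drop frames that have fallen out of the pre-buffer window.
        while timestamp - self.frames[0][0] > self.prebuffer_interval:
            self.frames.popleft()

    def drain(self) -> list:
        """Return the buffered frames (oldest first) and empty the buffer."""
        buffered = list(self.frames)
        self.frames.clear()
        return buffered

buffer = PreBuffer(prebuffer_interval=3.0)
for second in range(10):               # one frame per second, t = 0..9
    buffer.push(float(second), f"frame-{second}")

pre_trigger = buffer.drain()           # suppose the trigger fires at t = 9
print([ts for ts, _ in pre_trigger])   # [6.0, 7.0, 8.0, 9.0]
```

With a 3 second window, the clip would begin with the frames from t = 6 onward, that is, 3 seconds before the trigger.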

Metadata

| Metadata Property | Description |
|---|---|
| None | None |

Common Provider Configurations

Examples

| Property | AWS | Digital Ocean | Wasabi | GCP |
|---|---|---|---|---|
| s3_endpoint | https://s3.us-east-1.amazonaws.com | https://nyc3.digitaloceanspaces.com | https://s3.wasabisys.com | https://storage.googleapis.com |
| s3_region | us-east-1 | nyc3 | us-east-1 | us-central1 |
| s3_bucket | my-aws-bucket | my-do-bucket | my-wasabi-bucket | my-google-bucket |
| s3_key_prefix | my-folder | my-folder | my-folder | my-folder |
| s3_access_key_id | AKIAIOSFODNN7EXAMPLE | DO00QWERTYUIOPASDFGHJKLZXCVBNM | WASABI00QWERTYUIOPASDFGHJKLZXCVBNM | GOOG00QWERTYUIOPASDFGHJKLZXCVBNM |
| s3_secret_access_key | xxxxx | xxxxx | xxxxxx | xxxxxxx |
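Before entering values like those in the table, it can help to check them against the rules stated in the Properties section (endpoint without the bucket name, key prefix without a trailing /). The sketch below is a hypothetical pre-flight check, not a Lumeo API: `check_s3_properties` and its messages are made up for illustration, and it only detects a bucket name given as a URL path, not one embedded in the hostname.

```python
from urllib.parse import urlparse

def check_s3_properties(props: dict) -> list:
    """Return a list of problems found in a set of s3_* property values."""
    problems = []
    endpoint = urlparse(props.get("s3_endpoint", ""))
    if endpoint.scheme not in ("http", "https") or not endpoint.netloc:
        problems.append("s3_endpoint should be a full URL")
    if endpoint.path not in ("", "/"):
        problems.append("s3_endpoint should not include the bucket name or a path")
    if props.get("s3_key_prefix", "").endswith("/"):
        problems.append("s3_key_prefix should not end with /")
    for name in ("s3_region", "s3_bucket", "s3_access_key_id", "s3_secret_access_key"):
        if not props.get(name):
            problems.append(f"{name} is empty")
    return problems

# Values from the AWS column of the table above.
aws = {
    "s3_endpoint": "https://s3.us-east-1.amazonaws.com",
    "s3_region": "us-east-1",
    "s3_bucket": "my-aws-bucket",
    "s3_key_prefix": "my-folder",
    "s3_access_key_id": "AKIAIOSFODNN7EXAMPLE",
    "s3_secret_access_key": "xxxxx",
}
print(check_s3_properties(aws))  # []

# A common mistake: bucket appended to the endpoint and a trailing slash on the prefix.
broken = dict(aws, s3_endpoint="https://s3.us-east-1.amazonaws.com/my-aws-bucket",
              s3_key_prefix="my-folder/")
print(check_s3_properties(broken))
```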

AWS Credentials

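Create user

```shell
aws iam create-user --user-name <username>
```

Attach policy

Save the following policy as s3-put-policy.json, replacing <bucket-name> with the name of your bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::<bucket-name>/*"
    },
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::<bucket-name>"
    }
  ]
}
```

Then attach it to the user:

```shell
aws iam put-user-policy --user-name <username> --policy-name S3PutObjectPolicy --policy-document file://s3-put-policy.json
```

Create a long-lived access key for the user and note the credentials

```shell
aws iam create-access-key --user-name <username>
```

Use the AccessKeyId and SecretAccessKey values from the command output as s3_access_key_id and s3_secret_access_key.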
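If you set this up for several buckets, the policy document can be generated rather than edited by hand. The sketch below builds the same two-statement policy shown above for a given bucket name; `build_put_policy` is a hypothetical helper and `my-aws-bucket` is a placeholder.

```python
import json

def build_put_policy(bucket_name: str) -> dict:
    """Build the PutObject + ListBucket policy for one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Object-level permission applies to keys inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                # Bucket-level permission applies to the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
        ],
    }

policy = build_put_policy("my-aws-bucket")
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Redirecting the script's output to s3-put-policy.json produces a file that can be passed to the put-user-policy command as is.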
