AI Models

AI Models allow you to extract information from video, such as detecting people and vehicles or identifying whether a person is wearing a mask.

Overview

AI Models are used in Pipelines to run inference on video. The inference output can be visualized on the stream or sent to your application using other Pipeline Nodes.

Lumeo provides you with a set of ready-to-use AI Models for common use cases, or you can upload your own models from a variety of popular formats (TensorFlow, PyTorch, ONNX, etc.) and use them within a Pipeline.

Lumeo can also import and use object detection metadata from AI models running on certain cameras.

Camera AI Models

See the Camera AI Models guide for details on how to enable and import inference results from AI models running on an AI-enabled camera.

Lumeo Gateway AI Models

The following section refers to AI Models that you can run on a Lumeo Gateway. These models are compatible with any video stream from any source.

Using a Model

You can run an AI Model (BYO or one from the Analytics Library) using the Model Inference Node in a Pipeline. To do so, add the Model Inference Node and select the model you'd like to use from the Node properties.

The Model Inference Node adds inference output (detected objects, labels, etc.) to the Pipeline metadata for supported (i.e., non-Custom) model architectures and capabilities. This output can be displayed on the video stream using the Display Stream Info Node and accessed within the Function Node for processing.

For Custom model architectures and capabilities, Lumeo does not extract any metadata, but adds raw inference output tensors to the Pipeline metadata which you can extract and parse using the Function Node.
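As a rough illustration of that parsing step, the sketch below turns a raw classifier output into a label and a probability. It assumes the tensor has already been extracted from the Pipeline metadata as a flat list of logits; how the Function Node exposes that tensor is not shown here, and `parse_classifier_output` and the label list are hypothetical names chosen for this example.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Convert raw logits to probabilities (shifted by the max for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def parse_classifier_output(raw: List[float], labels: List[str]) -> Tuple[str, float]:
    """Return the most likely label and its probability.

    Assumption: `raw` holds one logit per entry in `labels`, in the same order.
    """
    if len(raw) != len(labels):
        raise ValueError("tensor length does not match the number of labels")
    probabilities = softmax(raw)
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return labels[best], probabilities[best]


# Hypothetical three-class output tensor.
label, probability = parse_classifier_output([0.0, 2.0, 1.0], ["helmet", "mask", "none"])
```

A real custom model may emit a different layout (for example, probabilities instead of logits, or several output layers), so the parser has to follow the model's actual output definition.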

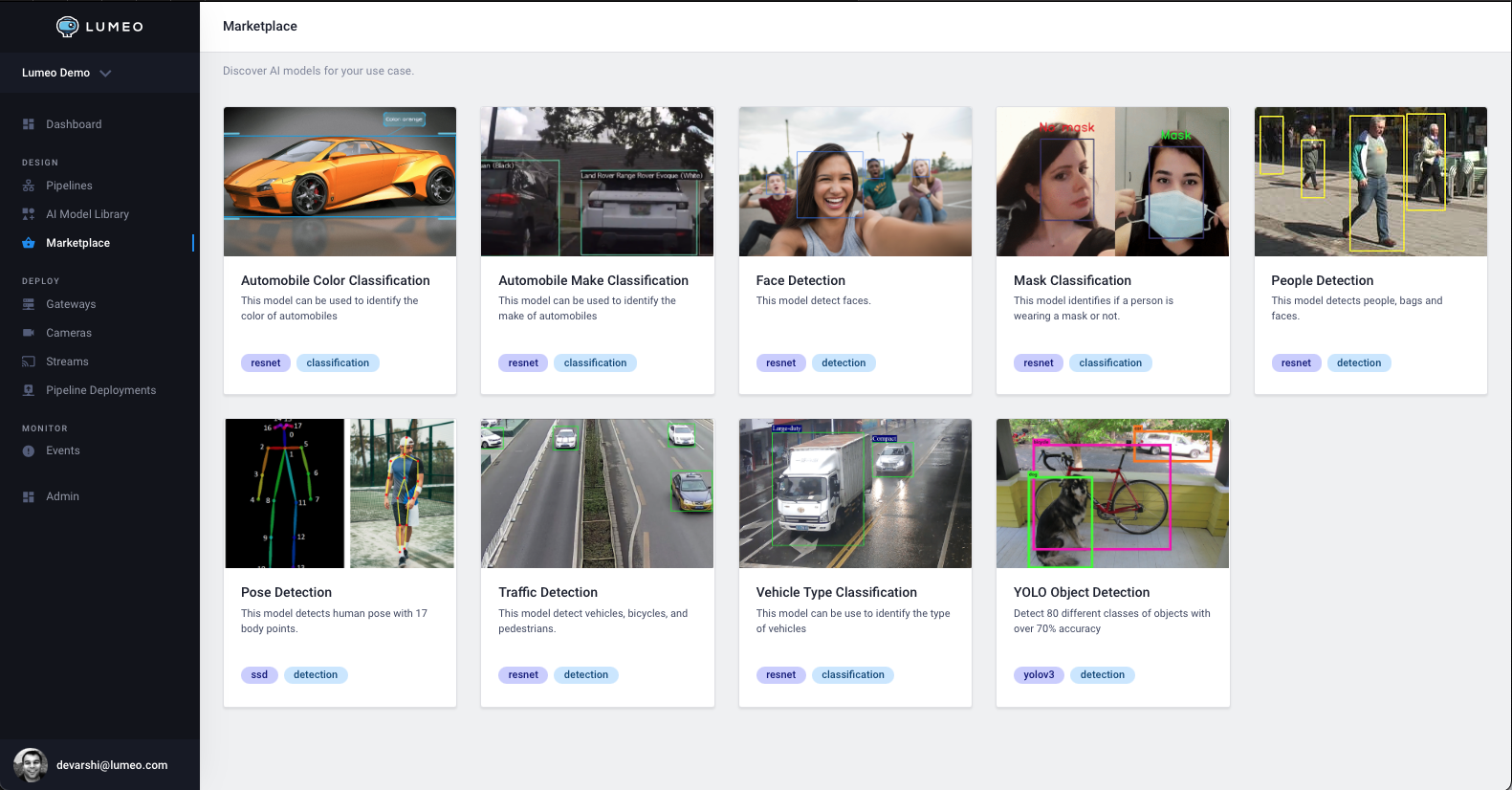

Ready-to-use Models

The Ready-to-use Models section in the Analytics Library lists a set of curated models that you can use within your Pipelines right away. Lumeo manages these models and keeps your Solution up to date with new versions of these models whenever they are updated.

A set of curated models available in the Marketplace

Generative AI Models

See the following Generative AI (Large vision model) nodes to incorporate LVMs into your analytic pipelines:

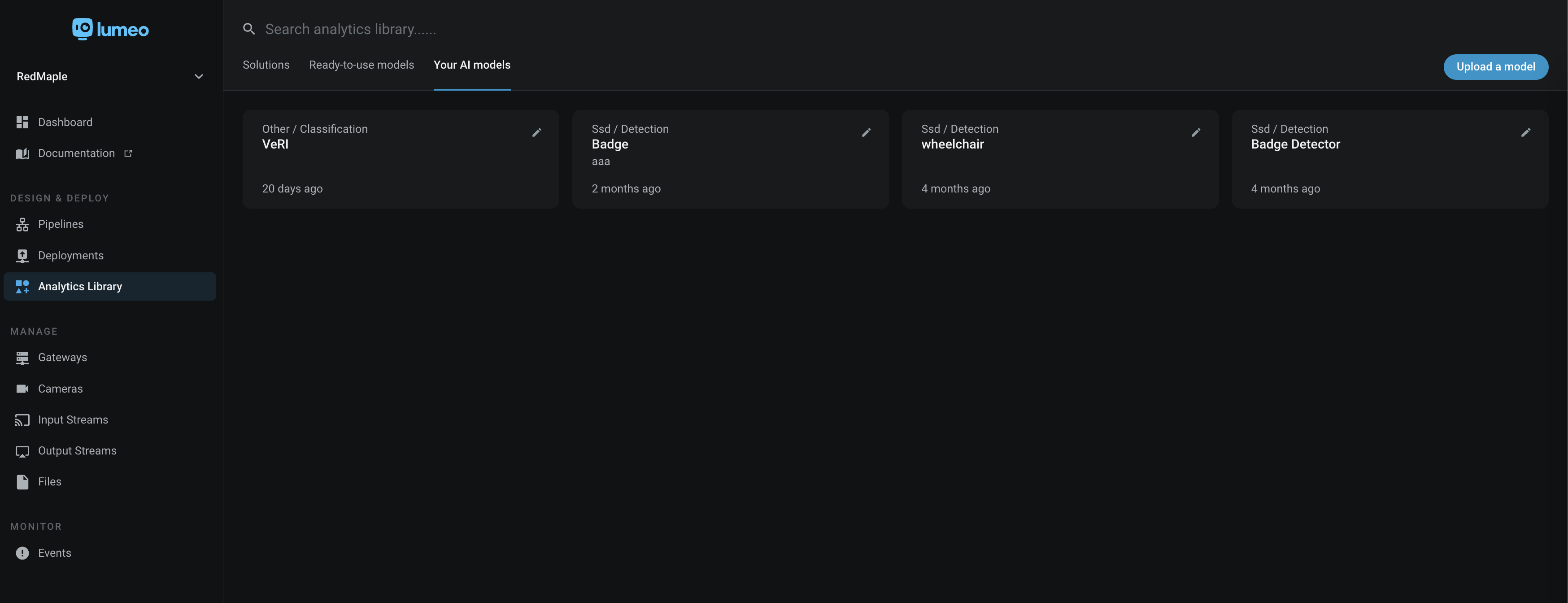

Bring-your-own (BYO) AI Models

Lumeo allows you to upload and use your own custom models within Pipelines. Lumeo keeps your Solution up to date with new versions of these models whenever you update them in the Console. To upload your own model, go to the Analytics Library -> Your AI Models section and click Upload a Model.

Upload Custom models in the Analytics Library

BYO Model Types

Easily upload your own vision model and use it in a Pipeline

Lumeo supports the following model formats, capabilities, and architectures:

Format

Format refers to how models are represented & stored, often tied to frameworks used to generate them. Lumeo supports the following model formats:

- ONNX (exported from PyTorch, TensorFlow, etc.)

- ONNX Lumeo YOLO

- YOLO Native (Darknet)

The Model Conversion guide has instructions on how to convert the most popular formats to a format that works with Lumeo. If you have a custom model you'd like to use with Lumeo, please contact us and we will work with you to get it running.

Capability

Capability defines the nature of inputs, outputs and what the model does. Lumeo supports models with the following capabilities:

- Detection: Given an image, detects specific objects in the image and outputs bounding boxes and detection probabilities.

- Classification: Given an image, outputs a list of categories with probabilities.

- Other: For models with "Custom" capabilities, Lumeo will run the model but does not attempt to parse the result to extract an object or a category. You will need to write a custom parser using the Function Node to process the result and extract any relevant information.
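To make the difference between the Detection and Classification outputs concrete, here is a minimal sketch of the two result shapes. The `Detection` fields and the helper names are illustrative assumptions, not Lumeo's actual metadata schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Detection:
    """One detected object: a label, a probability, and a bounding box in pixels."""
    label: str
    probability: float
    left: int
    top: int
    width: int
    height: int


def keep_confident(detections: List[Detection], min_probability: float) -> List[Detection]:
    """Detection output: keep only boxes at or above the given probability."""
    return [d for d in detections if d.probability >= min_probability]


def top_categories(scores: Dict[str, float], k: int) -> List[str]:
    """Classification output: return the k most probable category names."""
    return sorted(scores, key=lambda name: scores[name], reverse=True)[:k]


# Hypothetical results for a single frame.
detections = [
    Detection("person", 0.91, 40, 60, 120, 300),
    Detection("car", 0.32, 200, 180, 260, 140),
]
confident = keep_confident(detections, min_probability=0.5)
best = top_categories({"mask": 0.8, "no_mask": 0.15, "unknown": 0.05}, k=2)
```

A detection model yields zero or more boxes per frame, while a classification model yields one probability per category for the whole image it was given.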

Architecture

Architecture or Topology refers to the structure of the model (number of layers, operations, interconnects, inputs, outputs, output formats, etc.). Lumeo supports the following architectures:

- Mobilenet

- Resnet

- Azure Custom Vision: SSD & Yolo

- D-FINE

- YoloV3, YoloV3 Tiny, YoloV4, YoloV4 Tiny, YoloV5, YoloV6, YoloV7, YoloV7 Tiny, YoloV8, YoloV9, YoloV10, YoloV11, YoloV12

- Yolo-Nas, Yolo-R, Yolo-X, PP-YoloE

- SSD

- Other: For models with "Custom" architecture, Lumeo will run the model but does not attempt to parse the result to extract an object or a category. You will need to write a custom parser using the Function Node to process the result and extract any relevant information. See the Custom Model Parser guide.

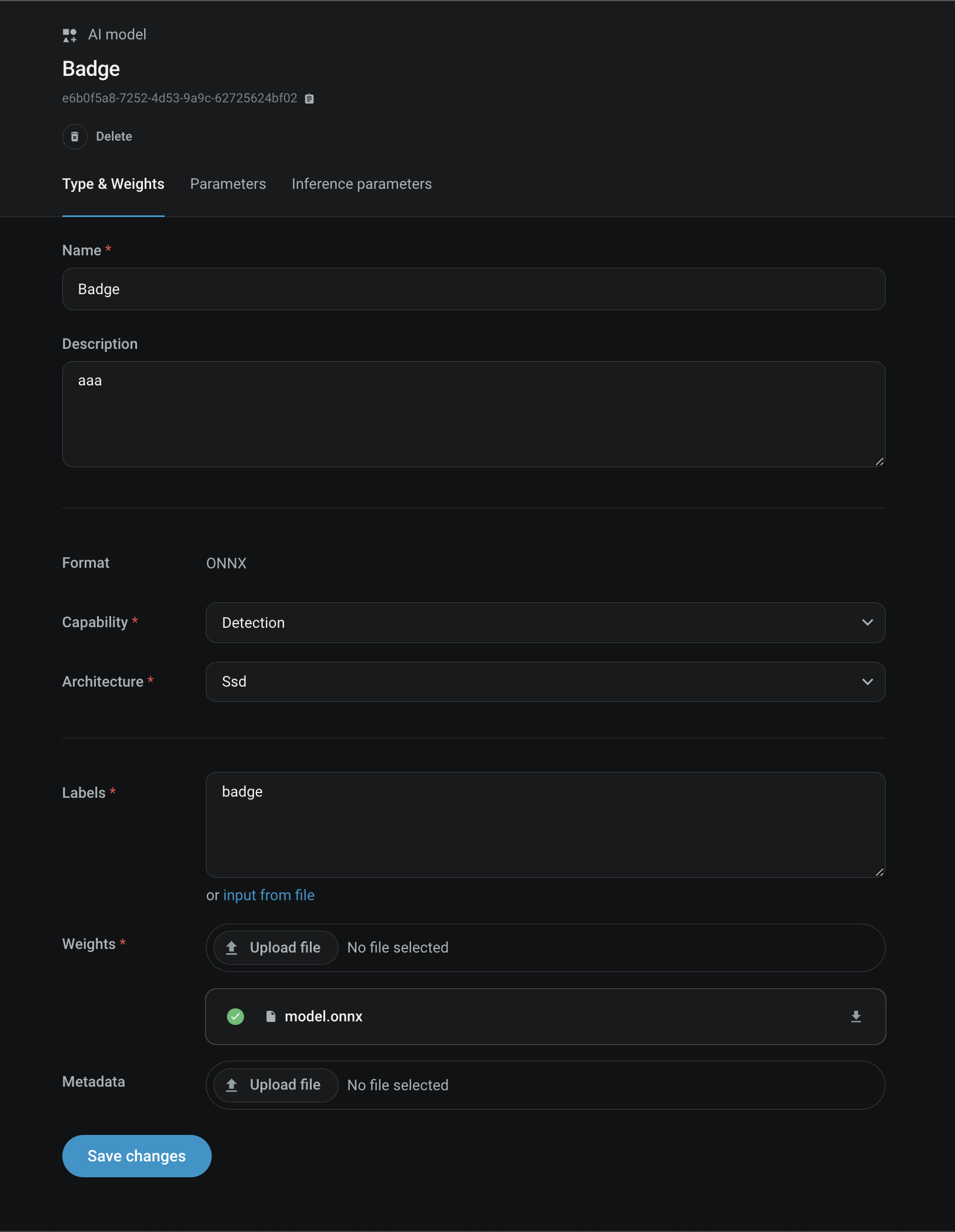

Weights & Metadata Files

| Model Format | Weights File | Metadata File |
|---|---|---|
| ONNX | | None |
| ONNX Lumeo YOLO | | None |
| YOLO Native (Darknet) | | Yolo Config file. Must be renamed in this format: Ex. |
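Before uploading a YOLO Native (Darknet) model, it can help to sanity-check the Yolo Config file. The sketch below is one possible way to read a Darknet-style `.cfg` (bracketed section headers followed by `key=value` lines) and pull out the input size and the number of `[yolo]` layers. The sample config is made up for illustration, and `parse_darknet_cfg` is a hypothetical helper, not part of Lumeo.

```python
from typing import Dict, List, Tuple

Section = Tuple[str, Dict[str, str]]


def parse_darknet_cfg(text: str) -> List[Section]:
    """Parse a Darknet-style cfg into an ordered list of (section name, options).

    Section names repeat in these files, so a list is used instead of a dict.
    """
    sections: List[Section] = []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            sections.append((line[1:-1].strip(), {}))
        elif "=" in line and sections:
            key, value = line.split("=", 1)
            sections[-1][1][key.strip()] = value.strip()
    return sections


# Made-up, heavily shortened config for illustration only.
SAMPLE_CFG = """
[net]
width=416
height=416
channels=3

[convolutional]
filters=255
size=1

[yolo]
classes=80
"""

sections = parse_darknet_cfg(SAMPLE_CFG)
net_options = sections[0][1]
input_size = (int(net_options["width"]), int(net_options["height"]))
yolo_layers = [options for name, options in sections if name == "yolo"]
```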

Import Guide

Importing your Model

Lumeo's ability to parse your model's outputs into objects or class attributes is conditional upon the model's output layers matching the format expected by Lumeo. See the Model Conversion guide for more information on expected model output layers for each Architecture. You can verify if your model output layers match these using Netron.

If your model's output layers don't match the ones built into Lumeo and there is no conversion guide that works, you can choose to write a Custom Model Parser.

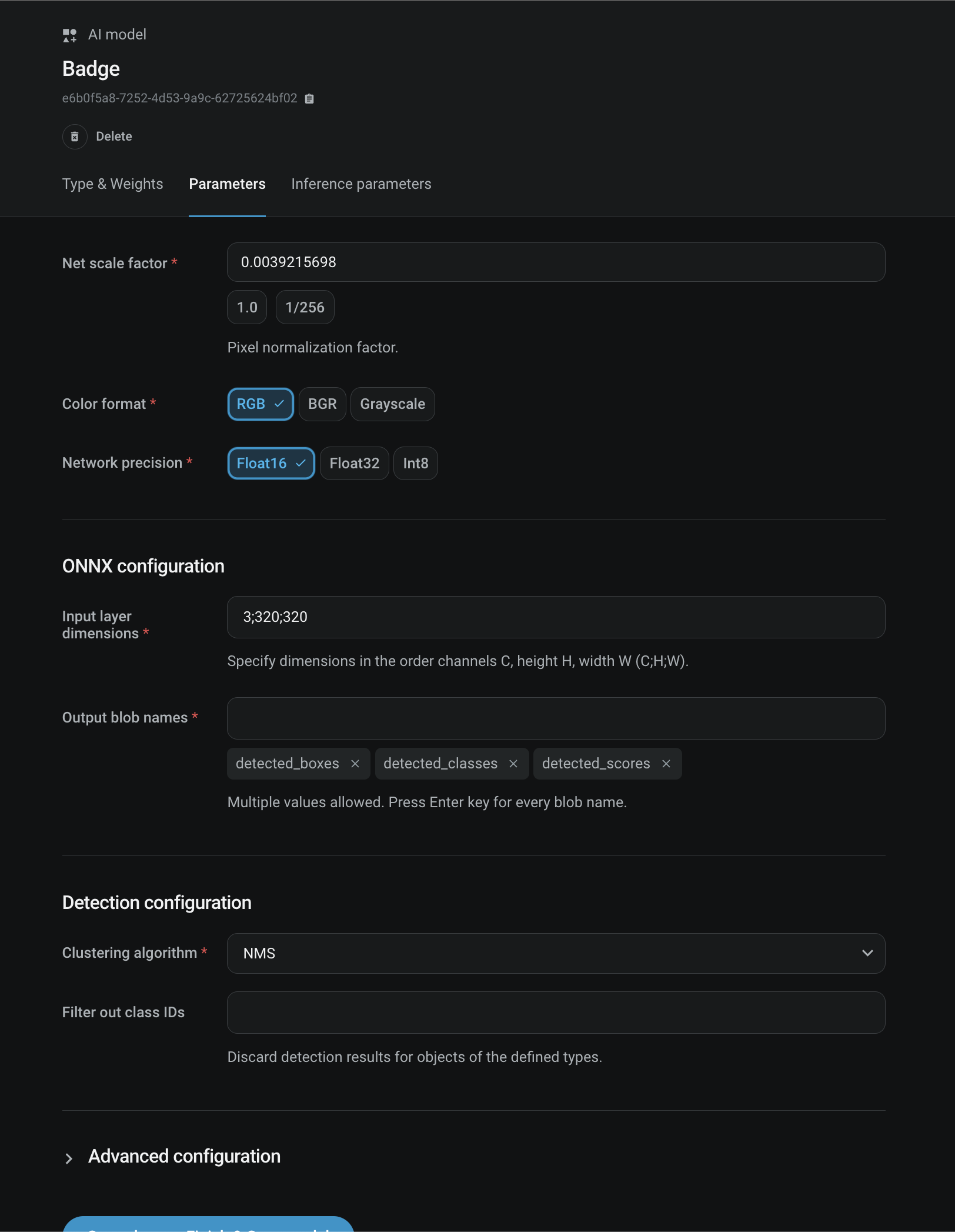

Parameters

This section contains Format- or Architecture-specific parameters needed to run the Model.

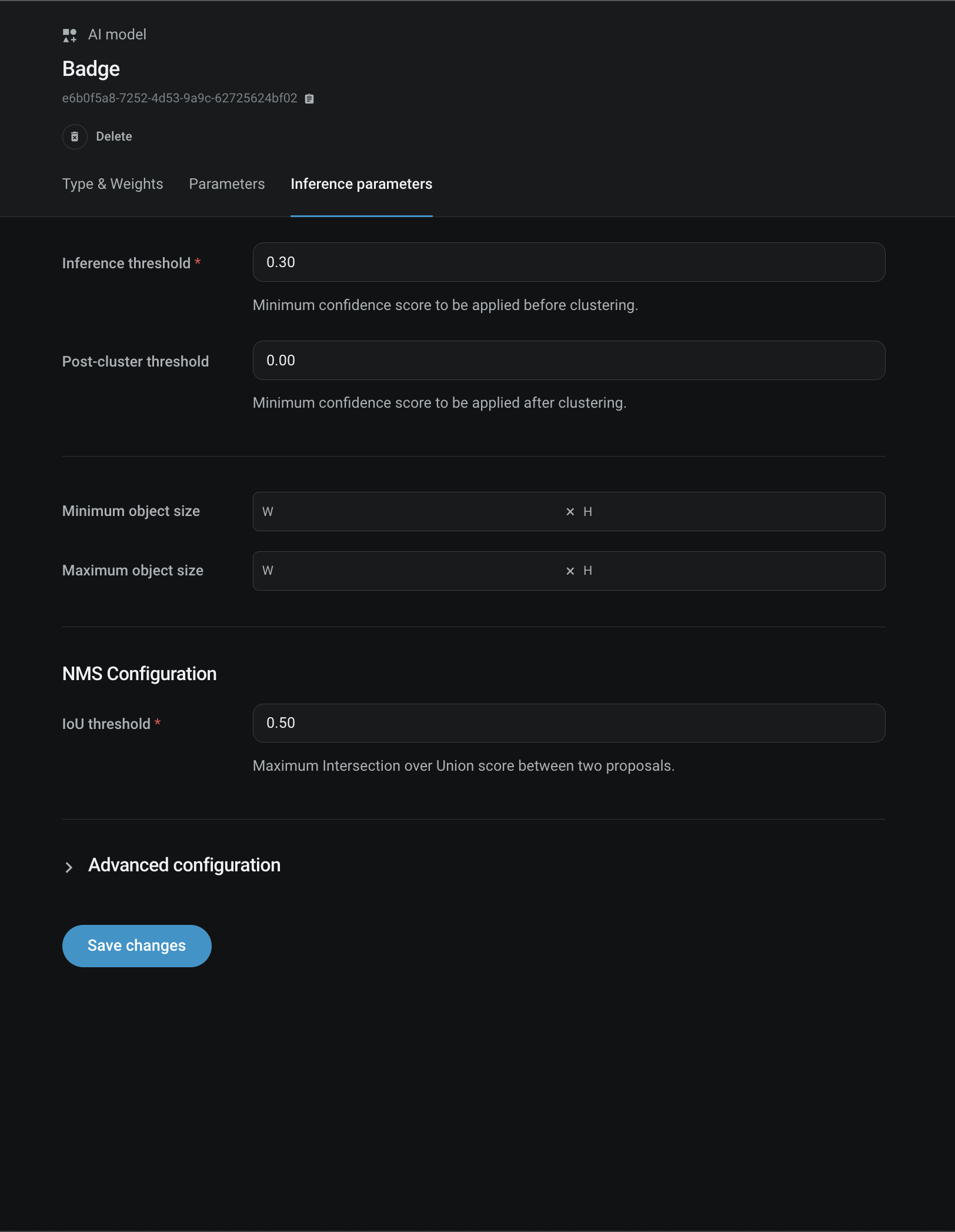

Inference Parameters

This section contains additional post-processing parameters.
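The specific parameters are not listed here. As an assumption for illustration, detection post-processing commonly involves a score threshold followed by IoU-based non-maximum suppression (NMS). The sketch below shows that general technique in plain Python; the names `score_threshold` and `iou_threshold` are hypothetical and may not match the fields shown in the Console.

```python
from typing import List, Tuple

# A box is (x1, y1, x2, y2, score) with (x1, y1) top-left and (x2, y2) bottom-right.
Box = Tuple[float, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], score_threshold: float, iou_threshold: float) -> List[Box]:
    """Drop low-score boxes, then greedily keep the highest-scoring non-overlapping ones."""
    candidates = sorted(
        (box for box in boxes if box[4] >= score_threshold),
        key=lambda box: box[4],
        reverse=True,
    )
    kept: List[Box] = []
    for box in candidates:
        if all(iou(box, other) <= iou_threshold for other in kept):
            kept.append(box)
    return kept


# Hypothetical raw detections: two overlapping boxes, one separate box, one weak box.
raw_boxes: List[Box] = [
    (0.0, 0.0, 10.0, 10.0, 0.9),
    (1.0, 1.0, 11.0, 11.0, 0.8),
    (20.0, 20.0, 30.0, 30.0, 0.7),
    (0.0, 0.0, 5.0, 5.0, 0.2),
]
kept_boxes = non_max_suppression(raw_boxes, score_threshold=0.5, iou_threshold=0.5)
```

Raising the score threshold removes more weak detections, while lowering the IoU threshold suppresses overlapping boxes more aggressively.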

API Reference

See models
