Publish to Elasticsearch

Publish metadata to Elasticsearch for reporting and dashboards.

Overview

This node publishes pipeline metadata to Elasticsearch periodically or when a trigger condition is met. You can use it for reporting and dashboards.

Inputs & Outputs

- Inputs: 1, Media Format: Raw Video
- Outputs: 1, Media Format: Raw Video
- Output Metadata: None

Properties

| Property | Description |
|---|---|
| trigger_type | When to collect metadata. Options: `custom_trigger`: when a custom trigger is fired; `auto_trigger`: when significant node properties change; `periodic`: at an interval; `scheduled`: at fixed times past the hour. |
| trigger | If trigger_type is `custom_trigger`, metadata is collected only when this trigger condition evaluates to True. The trigger expression must be a valid Dot-notation expression that operates on Pipeline Metadata and evaluates to True or False. See details here. ex. `nodes.annotate_line_counter1.lines.line1.total_objects_crossed_delta > 0` |
| interval | Minimum time, in seconds, between consecutive publishing actions. 0 means publish every time metadata is collected. ex. 60 |
| schedule_publishing | If set, ignores the interval property and schedules publishing at a synchronized time. Options: Every Day, Every Hour, Every 30 minutes, Every 15 minutes |
| es_uri | URI of the Elasticsearch server. ex. `https://lumeo1.elastic-cloud.com:9243`, or with credentials in the URI: `https://user:password@host:9243` |
| es_verify_certs | If true, verifies the Elasticsearch server certificate. Turn off to allow self-signed certificates (Warning: insecure). |
| es_api_key | Base64-encoded API key for your Elasticsearch server. Leave empty if you specify credentials in the URI. ex. V2F2Zk1YNEJkS2lTUHFvc0aUQ== |
| es_index | Name of the index to which metadata is published. The index is created if it does not already exist. ex. lumeometa |
| es_max_retries | Number of times to retry publishing when the connection to the Elasticsearch server fails, before giving up and discarding the data. |
| node_ids_to_publish | Publish metadata only for the specified node IDs. If not specified, publishes metadata from all upstream nodes. ex. annotate_line_counter1, annotate_queue1 |
| snapshot_type | If not None, sends a snapshot as a base64-encoded JPEG to Elasticsearch. Warning: significantly increases your ES storage consumption. Options: None (null); small: max 320px width; medium: max 640px width |
| use_stream_clock | If true, reports timestamps from the stream to Elasticsearch instead of clock time. Enable it to report correct time information when processing files, since files are typically processed faster than real time. ex. true / false |
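The es_api_key value uses Elasticsearch's "encoded" API key format. This is the Base64 encoding of the key's `id` and `api_key` joined by a colon. The Elasticsearch create API key response already contains this value in its `encoded` field. If you only have the `id` and `api_key` separately, the minimal sketch below builds the encoded form. The helper names are hypothetical, and the sketch assumes GNU coreutils `base64` (the Ubuntu default).

```bash
#!/bin/sh
# Sketch only: encode_es_api_key / decode_es_api_key are hypothetical helpers.

# Prints base64("<id>:<api_key>"), the value to paste into es_api_key.
encode_es_api_key() {
  # tr removes the line wrapping that base64 adds to long output
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Reverse operation: prints "<id>:<api_key>" for an encoded key.
decode_es_api_key() {
  printf '%s' "$1" | base64 -d
}
```

Usage: `encode_es_api_key "<id>" "<api_key>"` prints a single line that can be pasted into the node's es_api_key property.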

Metadata

This node does not add any new metadata to the frame.

| Metadata Property | Description |
|---|---|
| None | None |

Elasticsearch Configuration

Viewing Snapshots in Kibana

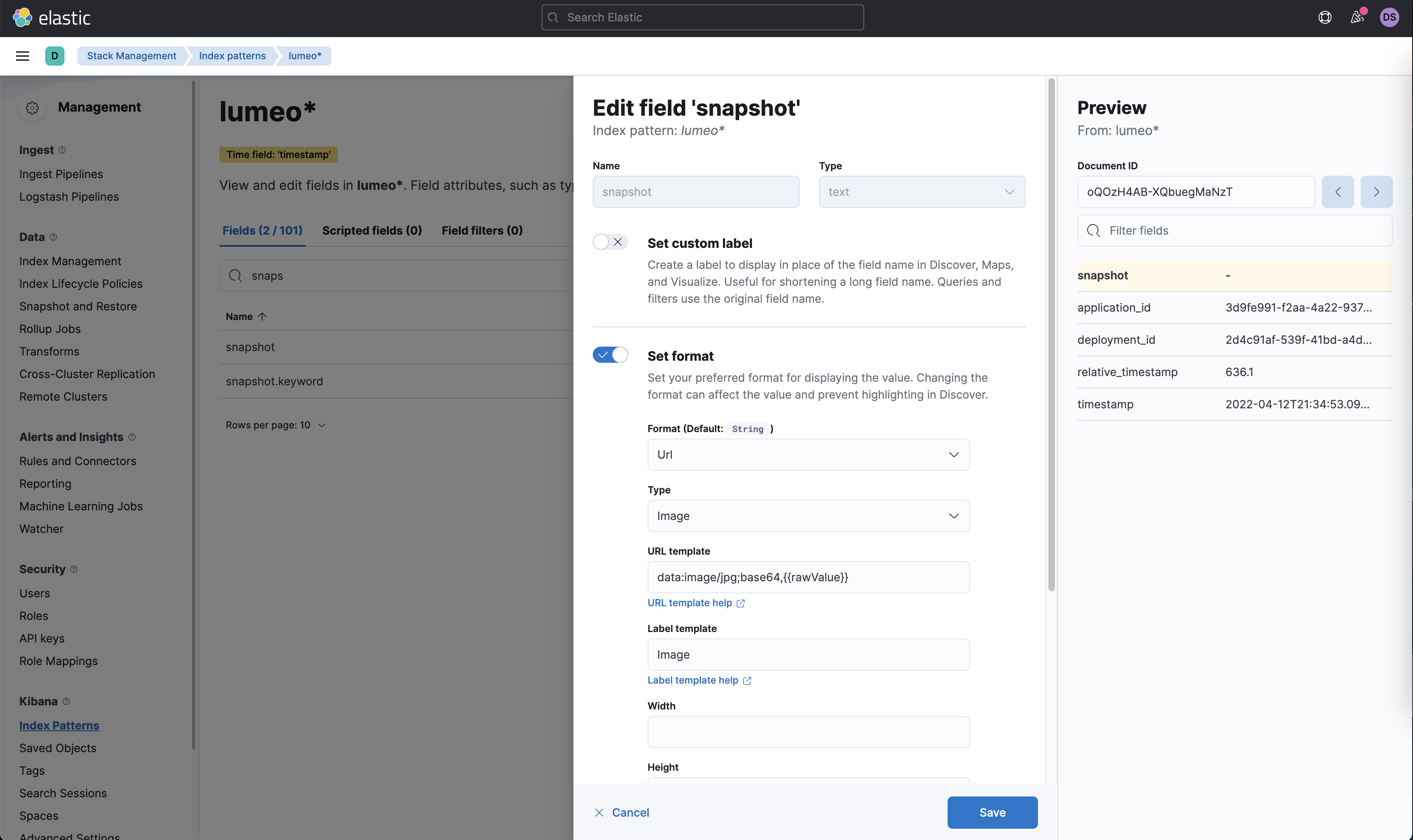

Go to Stack Management -> Index Patterns (called Data Views in Kibana 8.x and later) -> `lumeo-<application_id>` and search for the `snapshot` field. This field appears once at least one snapshot has been sent to ES from Lumeo.

- Click "Edit Field" (the pencil icon at the right end of the field name).
- Enable "Set Format" and configure it with the following settings:
  - Format: Url
  - Type: Image
  - URL Template: `data:image/jpg;base64,{{rawValue}}`
  - Label Template: Image
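The URL template above turns the stored base64 string into a `data:` URI for display. To inspect a snapshot outside Kibana, you can decode the same field value on the command line. This is a minimal sketch. It assumes you have copied the raw `snapshot` value from a document (for example in Kibana Discover) and that the value contains only base64 data with no `data:` prefix. `save_snapshot` and `is_jpeg` are hypothetical helper names.

```bash
#!/bin/sh
# Sketch only: save_snapshot / is_jpeg are hypothetical helpers.

# Decodes a base64 snapshot string ($1) into a file ($2).
# Assumes the value has no "data:image/...;base64," prefix.
save_snapshot() {
  printf '%s' "$1" | base64 -d > "$2"
}

# Succeeds if the file starts with the JPEG start-of-image marker (FF D8).
is_jpeg() {
  [ "$(head -c 2 "$1" | od -An -tx1 | tr -d ' \n')" = "ffd8" ]
}
```

Usage: `save_snapshot "$SNAPSHOT_B64" snapshot.jpg && is_jpeg snapshot.jpg`, where `SNAPSHOT_B64` is a shell variable holding the copied field value.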

Set up a new Elastic offline instance

Follow this guide to set up a new single-node Elasticsearch + Kibana instance to use as an offline data warehouse with Lumeo.

Machine prerequisites:

- Ubuntu 24
- At least 16GB RAM and 4 CPU cores (more for larger data volumes)
- At least 128GB of disk, more if you plan to store more data
- Docker with the Docker Compose plugin, which runs the stack

1. Adjust kernel settings on Linux

The vm.max_map_count kernel setting must be set to at least 262144.

How you set vm.max_map_count depends on your platform. Open the /etc/sysctl.conf file in a text editor with root privileges. You can use the following command:

```bash
sudo nano /etc/sysctl.conf
```

Navigate to the end of the file or search for the line containing vm.max_map_count. If the line exists, change it to the desired value:

```
vm.max_map_count=262144
```

If the line doesn't exist, add it at the end of the file. Save the file, exit the text editor, and apply the changes by running:

```bash
sudo sysctl -p
```

This command reloads the sysctl settings from the configuration file. The value of vm.max_map_count should now be 262144.
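You can also script this step, for example when provisioning several machines, and make the edit idempotent. The minimal sketch below assumes GNU `sed` (the Ubuntu default) and uses a hypothetical helper name, `set_max_map_count`. It rewrites an existing entry or appends a new one, so running it twice still leaves a single line.

```bash
#!/bin/sh
# Sketch only: set_max_map_count is a hypothetical helper.
# Arguments (both optional): config file path, desired value.
set_max_map_count() {
  local conf="${1:-/etc/sysctl.conf}" value="${2:-262144}"
  if grep -qsE '^[[:space:]]*vm\.max_map_count[[:space:]]*=' "$conf"; then
    # An entry already exists: rewrite it in place (GNU sed -i syntax)
    sed -i -E "s/^[[:space:]]*vm\.max_map_count[[:space:]]*=.*/vm.max_map_count=${value}/" "$conf"
  else
    # Make sure the file ends with a newline before appending
    if [ -s "$conf" ] && [ -n "$(tail -c 1 "$conf")" ]; then
      printf '\n' >> "$conf"
    fi
    printf 'vm.max_map_count=%s\n' "$value" >> "$conf"
  fi
}

# Example, from a root shell (e.g. after `sudo -s`):
#   set_max_map_count /etc/sysctl.conf 262144 && sysctl -p
#   sysctl -n vm.max_map_count    # should print 262144
```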

2. Prepare Environment Variables

Create or navigate to an empty directory for the project.

Inside this directory, create a .env file and set up the necessary environment variables.

Copy the following content and paste it into the .env file.

```bash
# Password for the 'elastic' user (at least 6 characters, no special characters)
ELASTIC_PASSWORD=lumeovision

# Password for the 'kibana_system' user (at least 6 characters, no special characters)
KIBANA_PASSWORD=lumeovision

# Lumeo Elasticsearch configuration. Deletes data after 180 days. Adjust the retention duration as needed, and set to 0 to disable deletes.
LUMEO_INDEX_PREFIX=lumeo
LUMEO_ILM_DELETE_DAYS=180

# Version of Elastic products
STACK_VERSION=9.2.4

# Set the cluster name
CLUSTER_NAME=docker-cluster

# Set to 'basic' or 'trial' to automatically start the 30-day trial of advanced features
LICENSE=basic

# Port to expose Elasticsearch HTTP API to the host
ES_PORT=9200

# Port to expose Kibana to the host
KIBANA_PORT=443

# Increase or decrease based on the available host memory (in bytes)
MEM_LIMIT=2147483648
```

In the .env file, set your own passwords for the ELASTIC_PASSWORD and KIBANA_PASSWORD variables instead of the example values.

The passwords must be alphanumeric and can’t contain special characters, such as ! or @. The bash script included in the compose.yml file only works with alphanumeric characters.
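Before starting the stack, you can check the .env file against these rules. The sketch below uses hypothetical helper names. It reads values with `grep`/`cut` rather than Docker Compose's own parser, so quoted values are not unwrapped. It also shows how the MEM_LIMIT byte value is derived: 2 GiB is 2 * 1024^3 = 2147483648 bytes.

```bash
#!/bin/sh
# Sketch only: all helper names below are hypothetical.

# Succeeds if $1 is alphanumeric and at least 6 characters long.
is_valid_password() {
  case "$1" in
    '' | *[!A-Za-z0-9]*) return 1 ;;
  esac
  [ "${#1}" -ge 6 ]
}

# Prints the value of KEY ($2) from an env file ($1); the last matching line wins.
env_value() {
  grep -E "^$2=" "$1" | tail -n 1 | cut -d= -f2-
}

# Checks ELASTIC_PASSWORD and KIBANA_PASSWORD; returns 1 if either is invalid.
check_env_passwords() {
  local env_file="${1:-.env}" status=0 var
  for var in ELASTIC_PASSWORD KIBANA_PASSWORD; do
    if ! is_valid_password "$(env_value "$env_file" "$var")"; then
      echo "$var must be alphanumeric and at least 6 characters" >&2
      status=1
    fi
  done
  return "$status"
}

# MEM_LIMIT is in bytes: converts a whole number of GiB to bytes.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}
```

Usage: run `check_env_passwords .env` from the project directory. Use `gib_to_bytes 4` to compute a MEM_LIMIT value for a 4 GiB limit.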

3. Create Docker Compose Configuration

Create a compose.yml file in the same directory and paste the following content into it.

```yaml
services:
  setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - ./api-keys:/usr/share/elasticsearch/api-keys
    user: "0"
    command: >
      bash -c '
        if [ x$${ELASTIC_PASSWORD} == x ]; then
          echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
          exit 1;
        elif [ x$${KIBANA_PASSWORD} == x ]; then
          echo "Set the KIBANA_PASSWORD environment variable in the .env file";
          exit 1;
        fi;
        if [ ! -f config/certs/ca.zip ]; then
          echo "Creating CA";
          bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
          unzip config/certs/ca.zip -d config/certs;
        fi;
        if [ ! -f config/certs/certs.zip ]; then
          echo "Creating certs";
          echo -ne \
          "instances:\n"\
          "  - name: es01\n"\
          "    dns:\n"\
          "      - es01\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          > config/certs/instances.yml;
          bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
          unzip config/certs/certs.zip -d config/certs;
        fi;
        echo "Setting file permissions";
        chown -R root:root config/certs;
        find . -type d -exec chmod 750 \{\} \;;
        find . -type f -exec chmod 640 \{\} \;;
        echo "Waiting for Elasticsearch availability";
        until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:$${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"$${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "Setting up Lumeo indices and ILM policies";
        INDEX_PREFIX="${LUMEO_INDEX_PREFIX:-lumeo}";
        ILM_DELETE_DAYS="${LUMEO_ILM_DELETE_DAYS:-30}";
        if [ "$$ILM_DELETE_DAYS" -gt 0 ]; then
          echo "Creating ILM policy: $${INDEX_PREFIX}-ilm-policy";
          curl -s -X PUT --cacert config/certs/ca/ca.crt \
            -u "elastic:$${ELASTIC_PASSWORD}" \
            -H "Content-Type: application/json" \
            https://es01:9200/_ilm/policy/$${INDEX_PREFIX}-ilm-policy \
            -d "{
              \"policy\": {
                \"phases\": {
                  \"hot\": {
                    \"actions\": {
                      \"rollover\": {
                        \"max_age\": \"1d\"
                      }
                    }
                  },
                  \"delete\": {
                    \"min_age\": \"$${ILM_DELETE_DAYS}d\",
                    \"actions\": {
                      \"delete\": {}
                    }
                  }
                }
              }
            }";
          echo "Creating index template: $${INDEX_PREFIX}-template";
          curl -s -X PUT --cacert config/certs/ca/ca.crt \
            -u "elastic:$${ELASTIC_PASSWORD}" \
            -H "Content-Type: application/json" \
            https://es01:9200/_index_template/$${INDEX_PREFIX}-template \
            -d "{
              \"index_patterns\": [\"$${INDEX_PREFIX}-*\"],
              \"template\": {
                \"settings\": {
                  \"index.lifecycle.name\": \"$${INDEX_PREFIX}-ilm-policy\",
                  \"index.lifecycle.rollover_alias\": \"$${INDEX_PREFIX}\"
                },
                \"mappings\": {
                  \"properties\": {
                    \"snapshot\": {
                      \"type\": \"text\",
                      \"index\": false,
                      \"store\": false,
                      \"norms\": false,
                      \"fielddata\": false,
                      \"eager_global_ordinals\": false,
                      \"index_phrases\": false
                    }
                  }
                }
              }
            }";
        fi;
        echo "Creating initial index: $${INDEX_PREFIX}-000001";
        curl -s -X PUT --cacert config/certs/ca/ca.crt \
          -u "elastic:$${ELASTIC_PASSWORD}" \
          -H "Content-Type: application/json" \
          https://es01:9200/$${INDEX_PREFIX}-000001 \
          -d "{
            \"aliases\": {
              \"$${INDEX_PREFIX}\": {
                \"is_write_index\": true
              }
            },
            \"mappings\": {
              \"properties\": {
                \"snapshot\": {
                  \"type\": \"text\",
                  \"index\": false,
                  \"store\": false,
                  \"norms\": false,
                  \"fielddata\": false,
                  \"eager_global_ordinals\": false,
                  \"index_phrases\": false
                }
              }
            }
          }";
        echo "Creating API key for $${INDEX_PREFIX}";
        API_KEY_RESPONSE=$$(curl -s -X POST --cacert config/certs/ca/ca.crt \
          -u "elastic:$${ELASTIC_PASSWORD}" \
          -H "Content-Type: application/json" \
          https://es01:9200/_security/api_key \
          -d "{
            \"name\": \"$${INDEX_PREFIX}-writer-key\",
            \"role_descriptors\": {
              \"$${INDEX_PREFIX}_writer\": {
                \"cluster\": [\"monitor\"],
                \"indices\": [{
                  \"names\": [\"$${INDEX_PREFIX}\", \"$${INDEX_PREFIX}-*\"],
                  \"privileges\": [\"write\", \"create_index\", \"auto_configure\", \"view_index_metadata\"]
                }]
              }
            }
          }");
        echo "$$API_KEY_RESPONSE" | grep -q "\"id\"";
        if [ $$? -eq 0 ]; then
          API_KEY_ID=$$(echo "$$API_KEY_RESPONSE" | grep -o "\"id\":\"[^\"]*\"" | cut -d\" -f4);
          API_KEY=$$(echo "$$API_KEY_RESPONSE" | grep -o "\"api_key\":\"[^\"]*\"" | cut -d\" -f4);
          ENCODED=$$(echo "$$API_KEY_RESPONSE" | grep -o "\"encoded\":\"[^\"]*\"" | cut -d\" -f4);
          echo "============================================================";
          echo "API KEY CREATED";
          echo "============================================================";
          echo "ID: $$API_KEY_ID";
          echo "API Key: $$API_KEY";
          echo "Encoded: $$ENCODED";
          echo "============================================================";
          mkdir -p /usr/share/elasticsearch/api-keys;
          echo "LUMEO_ES_API_KEY=$$ENCODED" > /usr/share/elasticsearch/api-keys/$${INDEX_PREFIX}-api-key.env;
          echo "LUMEO_ES_INDEX=$$INDEX_PREFIX" >> /usr/share/elasticsearch/api-keys/$${INDEX_PREFIX}-api-key.env;
          echo "";
          echo "API key saved to: ./api-keys/$${INDEX_PREFIX}-api-key.env";
          echo "Add this to your application environment:";
          echo "  LUMEO_ES_API_KEY=$$ENCODED";
          echo "  LUMEO_ES_INDEX=$$INDEX_PREFIX";
        else
          echo "Failed to create API key";
          echo "$$API_KEY_RESPONSE";
        fi;
        echo "All done!";
      '
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120
    environment:
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - KIBANA_PASSWORD=${KIBANA_PASSWORD}
      - LUMEO_INDEX_PREFIX=${LUMEO_INDEX_PREFIX:-lumeo}
      - LUMEO_ILM_DELETE_DAYS=${LUMEO_ILM_DELETE_DAYS:-0}

  es01:
    depends_on:
      setup:
        condition: service_healthy
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata:/usr/share/elasticsearch/data
    ports:
      - ${ES_PORT}:9200
    environment:
      - node.name=es01
      - cluster.name=${CLUSTER_NAME}
      - discovery.type=single-node
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es01/es01.key
      - xpack.security.http.ssl.certificate=certs/es01/es01.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es01/es01.key
      - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    depends_on:
      es01:
        condition: service_healthy
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    volumes:
      - certs:/usr/share/kibana/config/certs
      - kibanadata:/usr/share/kibana/data
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVERNAME=kibana
      - ELASTICSEARCH_HOSTS=https://es01:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
      - SERVER_PUBLICBASEURL=http://localhost:5601
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

volumes:
  certs:
    driver: local
  esdata:
    driver: local
  kibanadata:
    driver: local
```

4. Start Docker Compose

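Start Elasticsearch and Kibana with Docker Compose by running the following command from your project directory:

```bash
docker compose up -d
```

Check the status of the services:

```bash
docker compose ps -a
```

The setup service exits after its script finishes, so it is listed as exited. The es01 and kibana services should keep running and report healthy once they are ready.

5. Retrieve Elastic API key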

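The setup container (the `setup` service in compose.yml) creates an API key for the Publish to Elasticsearch node. Once it finishes its work (typically a few minutes), it saves the key and the index name to the api-keys/lumeo-api-key.env file in your project directory. Use the LUMEO_ES_API_KEY value for the node's es_api_key property and the LUMEO_ES_INDEX value for es_index. The file is named after LUMEO_INDEX_PREFIX, so its name changes if you change the prefix. The key is also printed in the setup container's logs (`docker compose logs setup`).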
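To script this step, you can poll for the file and print the two values it contains. This is a minimal sketch. `read_lumeo_api_key` is a hypothetical helper, and its default path assumes the default `lumeo` index prefix. It parses the file instead of sourcing it, so nothing in the file is executed.

```bash
#!/bin/sh
# Sketch only: read_lumeo_api_key is a hypothetical helper.
# Arguments (both optional): key file path, seconds to wait before giving up.
read_lumeo_api_key() {
  local key_file="${1:-api-keys/lumeo-api-key.env}" timeout="${2:-600}" waited=0
  # Poll every 5 seconds until the setup container has written the file
  until [ -f "$key_file" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out waiting for $key_file" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  # cut -f2- keeps everything after the first '=', including base64 '=' padding
  printf 'es_api_key=%s\n' "$(grep -E '^LUMEO_ES_API_KEY=' "$key_file" | cut -d= -f2-)"
  printf 'es_index=%s\n' "$(grep -E '^LUMEO_ES_INDEX=' "$key_file" | cut -d= -f2-)"
}
```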

6. Access Elasticsearch and Kibana

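Once Docker Compose has started the services, you can open Elasticsearch at https://localhost:9200 and Kibana at http://localhost:443 in your web browser. In this configuration, Kibana serves plain HTTP on the KIBANA_PORT set in .env. Elasticsearch uses a certificate signed by the CA that the setup container generated, so your browser will warn that the certificate is not trusted.

Log in to Kibana as the elastic user, using the ELASTIC_PASSWORD you set in the .env file.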
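Before configuring the node, you can confirm with curl that both the elastic credentials and the generated API key work. Run this sketch from the project directory. It assumes the default file names used in this guide, and `check_local_es` and `es_api_key_header` are hypothetical helpers. First, it copies the generated CA certificate out of the es01 container so curl can verify the TLS connection. It then authenticates with the `Authorization: ApiKey` header against the `_security/_authenticate` endpoint, which reports the identity behind the credentials.

```bash
#!/bin/sh
# Sketch only: helper names are hypothetical; assumes the default .env and
# api-keys/lumeo-api-key.env file names from this guide.

# Header Elasticsearch expects for an encoded API key.
es_api_key_header() {
  printf 'Authorization: ApiKey %s' "$1"
}

check_local_es() {
  local es_url="${1:-https://localhost:9200}" password api_key
  password=$(grep -E '^ELASTIC_PASSWORD=' .env | cut -d= -f2-)
  api_key=$(grep -E '^LUMEO_ES_API_KEY=' api-keys/lumeo-api-key.env | cut -d= -f2-)
  # Copy the CA certificate generated by the setup container out of es01
  docker compose cp es01:/usr/share/elasticsearch/config/certs/ca/ca.crt ./ca.crt || return 1
  # Basic auth as the elastic user: prints cluster name and version info
  curl -fsS --cacert ./ca.crt -u "elastic:$password" "$es_url" || return 1
  # API key auth: reports which key and user the credentials belong to
  curl -fsS --cacert ./ca.crt -H "$(es_api_key_header "$api_key")" \
    "$es_url/_security/_authenticate"
}
```

Usage: `check_local_es` after `docker compose up -d` has finished and the key file exists. A non-zero exit status means one of the two requests failed.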
