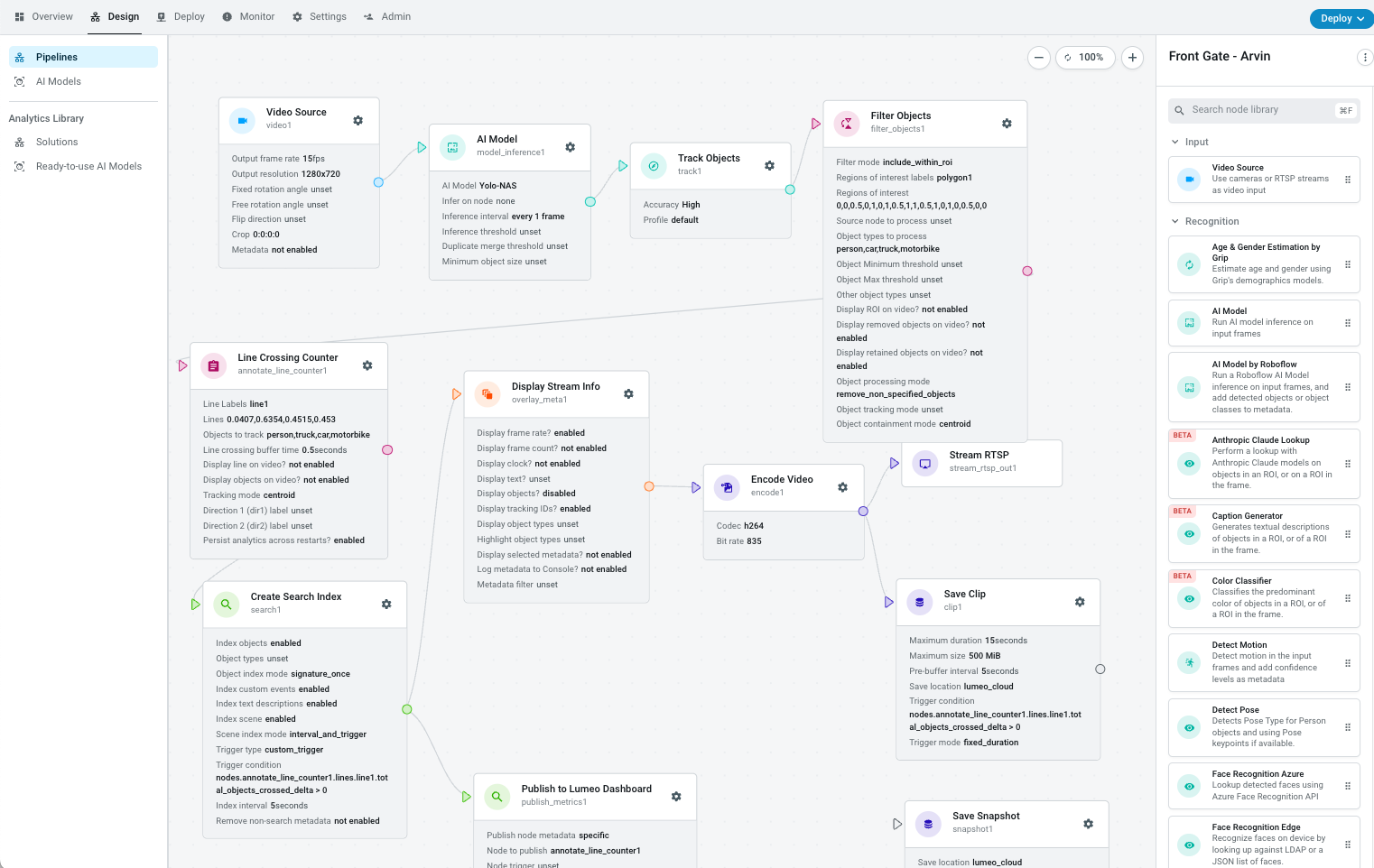

Workflow (aka Pipeline)

Workflows make it easy to process video, on the edge or in the cloud. A Workflow is a flexible, cloud-managed video processing engine that can route, process, store, and transform video and images.

Overview

Workflows (aka Pipelines) can be deployed to run on a variety of edge devices (aka Gateways), with support for accelerated inference on NVIDIA GPUs and ML accelerators.

Workflow Editor

The Workflow Editor is a node-based editor that lets you build a video workflow quickly. You can drag and drop nodes from the node library onto the workflow canvas and link them together to create your workflow. For a list of nodes and how they work, see the Node Reference section.

Nodes

A Workflow is made up of one or more Nodes that can receive video from Cameras and Streams, process it, and output files, Streams, or metadata to your application.

Node Inputs & Outputs

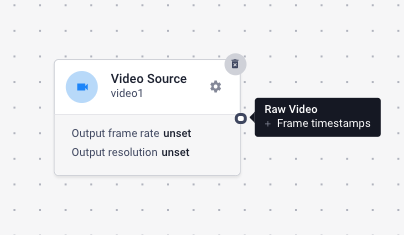

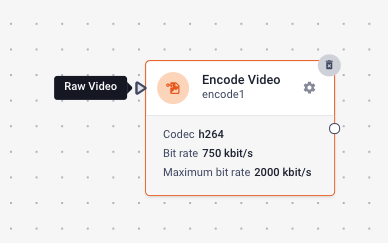

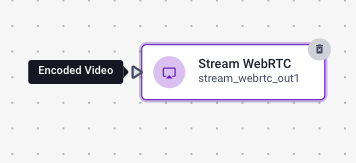

When you hover over the Input (triangle) and Output (circle) connectors of a Node on the canvas, you can see the type of media the node expects at its input and the type it produces at its output. To connect two nodes, the output media type of the upstream node must match the input media type of the downstream node. The editor will not let you connect two nodes whose media types are incompatible.

The editor also displays the metadata that a node outputs along with the media. Unlike media types, metadata does not have to match: you can connect two nodes that have different metadata, because metadata is cumulative (each node simply adds its own metadata to each frame it processes).

Lumeo supports the following media types in the workflow:

- Raw Video: nodes with this output type produce raw video frames.

- Encoded Video: nodes with this output type produce encoded video frames.
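The connection rules above can be sketched in a few lines of Python. This is a simplified model, not Lumeo's implementation: the Node class, its fields, and the helper names are hypothetical. It shows the two rules described here: a connection is allowed only when the upstream output media type equals the downstream input media type, and metadata is never compared but accumulated, with each node adding its keys to what upstream nodes already attached.

```python
import copy
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class MediaType(Enum):
    RAW_VIDEO = "raw"
    ENCODED_VIDEO = "encoded"


@dataclass
class Node:
    """Hypothetical, simplified model of a node (not Lumeo's API)."""
    name: str
    input_type: Optional[MediaType]  # None for a source node with no input
    output_type: MediaType
    adds_metadata: dict = field(default_factory=dict)


def can_connect(upstream: Node, downstream: Node) -> bool:
    """Only media types are compared; metadata never blocks a connection."""
    return upstream.output_type == downstream.input_type


def merge(into: dict, extra: dict) -> None:
    """Recursively merge `extra` into `into` without dropping existing keys."""
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(into.get(key), dict):
            merge(into[key], value)
        else:
            into[key] = copy.deepcopy(value)


def run_chain(nodes: List[Node]) -> dict:
    """Validate a linear chain, then accumulate one frame's metadata along it."""
    for upstream, downstream in zip(nodes, nodes[1:]):
        if not can_connect(upstream, downstream):
            raise ValueError(f"cannot connect {upstream.name} -> {downstream.name}")
    meta: dict = {}
    for node in nodes:
        merge(meta, node.adds_metadata)  # each node adds to what is already there
    return meta


# Hypothetical two-node chain using names from the metadata example below.
source = Node("video1", None, MediaType.RAW_VIDEO, {"video1.source_type": "stream"})
counter = Node("annotate_presence1", MediaType.RAW_VIDEO, MediaType.RAW_VIDEO,
               {"nodes": {"annotate_presence1": {"type": "annotate_presence"}}})
print(run_chain([source, counter]))
# → {'video1.source_type': 'stream', 'nodes': {'annotate_presence1': {'type': 'annotate_presence'}}}
```

The recursive merge is what makes metadata cumulative in this model: a downstream node can add its own entry under "nodes" without erasing entries from upstream nodes.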

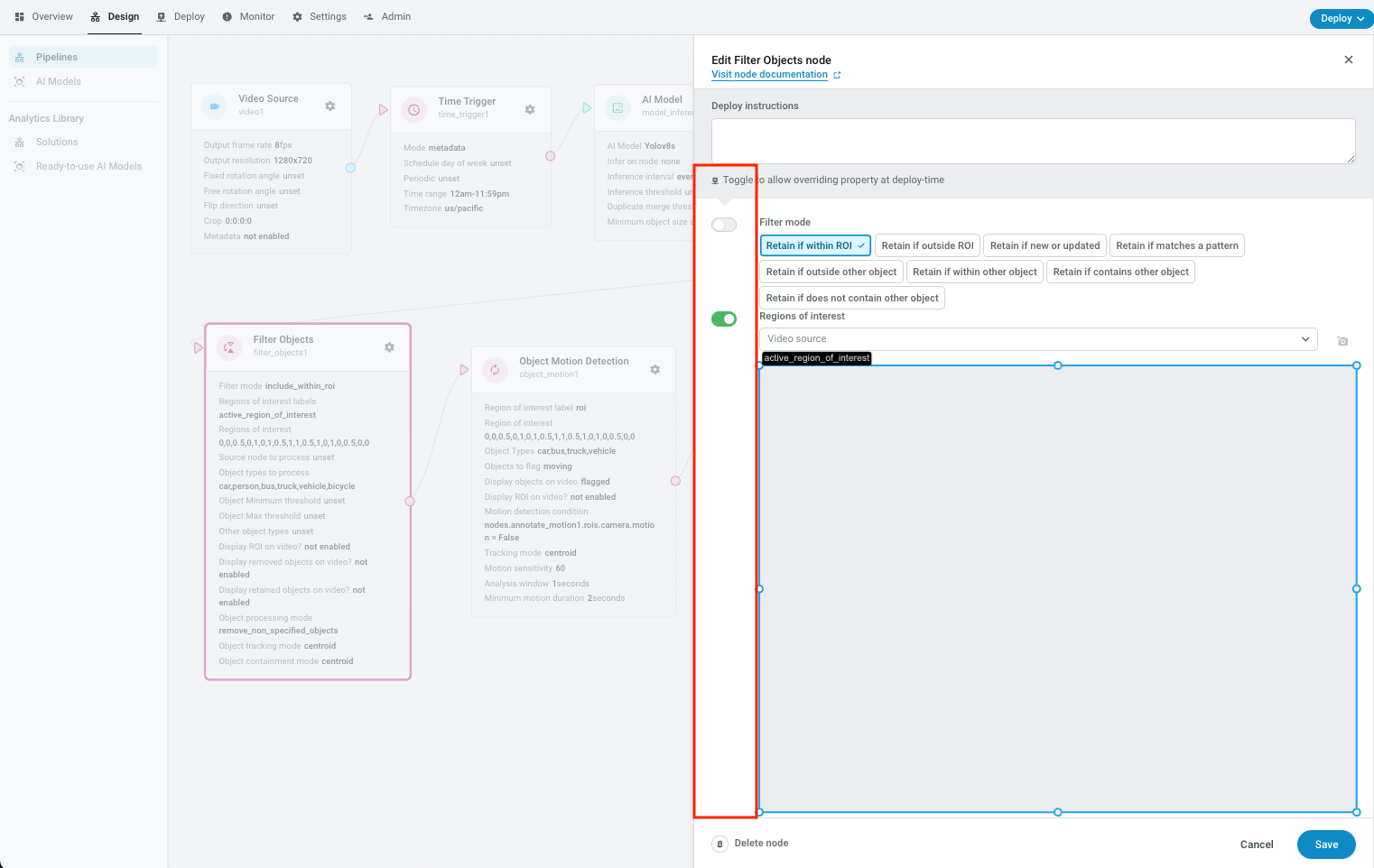

Node Properties

Some nodes have additional properties that you can configure by clicking on the gear icon on the node. Here's how the Node properties work:

- Properties specified here will apply to all the deployments for that Workflow.

- Properties that are empty but required will need to be specified when deploying the workflow.

- Properties specified here with the "Toggle to allow overriding property at deploy time" enabled will show up with these values as defaults, but can be overridden when deploying the workflow.

- The Video Source node is unique in that the actual source of the video can only be specified when you deploy the workflow.

This allows you to create a "template" for the Workflow, and then customize it for each deployment, for instance, on a different device or with a different video source.

Node properties with "Toggle to allow overriding at deploy time" in red.
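The property rules listed above can be read as a small resolution step performed at deploy time. The sketch below is a hypothetical model (PropertySpec, resolve, and the property names are illustrative, not Lumeo's API). It assumes an empty property is represented as None and that a deploy-time value for a property without the override toggle is simply ignored; the real editor may instead not offer such properties at deploy time.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class PropertySpec:
    """Hypothetical representation of one node property in the Workflow editor."""
    value: Optional[Any] = None  # value set in the editor; None means empty
    required: bool = False
    overridable: bool = False    # "Toggle to allow overriding property at deploy time"


def resolve(specs: Dict[str, PropertySpec], deploy_values: Dict[str, Any]) -> Dict[str, Any]:
    """Combine workflow-level properties with values supplied at deploy time."""
    resolved: Dict[str, Any] = {}
    for name, spec in specs.items():
        if spec.value is None:
            # Empty in the editor: the deployment must supply it if it is required.
            if name in deploy_values:
                resolved[name] = deploy_values[name]
            elif spec.required:
                raise ValueError(f"property {name!r} must be specified at deploy time")
        elif spec.overridable and name in deploy_values:
            resolved[name] = deploy_values[name]  # deploy-time override of the default
        else:
            resolved[name] = spec.value  # workflow value applies to every deployment
    return resolved


# Hypothetical properties for one node in a workflow "template".
specs = {
    "fps": PropertySpec(10),                          # fixed for all deployments
    "threshold": PropertySpec(0.5, overridable=True),  # default, can be overridden
    "webhook_url": PropertySpec(required=True),        # empty but required
}
print(resolve(specs, {"webhook_url": "https://example.com/hook", "threshold": 0.7}))
```

Each deployment passes its own deploy_values, which is how one Workflow acts as a template for many deployments in this model.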

Node Metadata

Along with processing media, a Workflow also supports frame-level metadata, which is carried along with each frame as it moves through the Workflow.

Built-in Metadata

Some nodes (such as the Add Metadata Node, AI Model Node, and Line Counter Node) inject metadata into the frames that they process.

To determine the metadata flowing through your workflow, refer to the documentation for each node you are using.

Here is an example of the metadata carried in a Workflow that counts objects present in a given region of interest (using a Video Source Node and a Presence Counter Node):

```json
{
  "video1.source_type": "stream",
  "video1.source_name": "Parking Lot test video",
  "video1.source_id": "93077c84-b9ce-409f-966f-bdf2bbc7c242",
  "nodes": {
    "annotate_presence1": {
      "type": "annotate_presence",
      "rois": {
        "roi1": {
          "coords": [[1, 1], [100, 100], [200, 200]],
          "total_objects": 2,
          "objects_entered_delta": 1,
          "objects_exited_delta": 0,
          "objects_above_max_time_threshold_count": 1,
          "current_objects_count": 2,
          "current_objects": {
            "15069984211300461000": {
              "first_seen": 20,
              "time_present": 3600
            },
            "1506998428100461000": {
              "first_seen": 22,
              "time_present": 20
            }
          }
        }
      }
    }
  },
  "objects": [
    {
      "attributes": [],
      "probability": -0.10000000149011612,
      "class_id": 0,
      "rect": {
        "top": 236.29208374023438,
        "height": 15.62222957611084,
        "left": 339.904052734375,
        "width": 31.91175079345703
      },
      "id": 15069984211300461000,
      "label": "car"
    },
    {
      "attributes": [],
      "probability": -0.10000000149011612,
      "class_id": 0,
      "rect": {
        "top": 250.60275268554688,
        "height": 19.088224411010742,
        "left": 235.5812530517578,
        "width": 35.78194046020508
      },
      "id": 1506998428100461000,
      "label": "car"
    }
  ]
}
```

Extracting Metadata

Other nodes, such as the Save Clip Node, Save Snapshot Node, and Webhook Node, let you save or send the metadata to external systems.

You can also add, extract or process metadata using the Function Node.

To log metadata or display it on the video, use the Display Stream Info Node.
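In the example metadata, each key in current_objects matches the id of an entry in the objects list. If an external system receives metadata shaped like that example (for instance, through the Webhook Node), a plain-Python sketch like the one below could join the two to report what is currently in a region. This assumes the payload has the structure shown above; objects_in_roi is a hypothetical helper and EXAMPLE is a trimmed copy of the example, not a documented payload format.

```python
import json

# Trimmed copy of the example metadata shown above (assumed payload shape).
EXAMPLE = """
{
  "nodes": {
    "annotate_presence1": {
      "type": "annotate_presence",
      "rois": {
        "roi1": {
          "total_objects": 2,
          "current_objects": {
            "15069984211300461000": {"first_seen": 20, "time_present": 3600},
            "1506998428100461000": {"first_seen": 22, "time_present": 20}
          }
        }
      }
    }
  },
  "objects": [
    {"id": 15069984211300461000, "label": "car"},
    {"id": 1506998428100461000, "label": "car"}
  ]
}
"""


def objects_in_roi(meta: dict, node_id: str, roi_id: str) -> list:
    """Return (label, time_present) for each object currently in an ROI."""
    roi = meta["nodes"][node_id]["rois"][roi_id]
    # current_objects is keyed by the object id as a string; objects use numeric ids.
    labels = {str(obj["id"]): obj["label"] for obj in meta.get("objects", [])}
    return [
        (labels.get(obj_id, "unknown"), info["time_present"])
        for obj_id, info in roi["current_objects"].items()
    ]


meta = json.loads(EXAMPLE)
print(objects_in_roi(meta, "annotate_presence1", "roi1"))
# → [('car', 3600), ('car', 20)]
```

Python's json module parses the large ids as exact integers, so converting them back with str() reproduces the current_objects keys; a consumer in a language that parses JSON numbers as doubles may lose precision on ids this large.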

Trigger Conditions

See also: Trigger Conditions Guide

Metadata can be used to trigger actions in some nodes. For instance, the Save Clip Node can start capturing a clip when an event occurs.

These trigger conditions are written in dot notation, as key paths that start from the top-level properties of the metadata. They support the following operators:

- Comparison operators on numeric and string properties: >, <, >=, <=, =, !=. For example, KEY_PATH = "string" or KEY_PATH > number

- Booleans: KEY_PATH

- Combining conditions: CONDITION and CONDITION, CONDITION or CONDITION

For example, you could use the following conditions:

- nodes.annotate_presence1.rois.roi1.total_objects > 0: with the metadata example above, triggers whenever there are objects in the region.

- usermetadata.value: checks a boolean, such as the custom field set in the tip below.
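To make the condition grammar concrete, here is a minimal Python evaluator for it, run against a dict shaped like the example metadata. It is a sketch, not Lumeo's implementation, and it rests on assumptions the text does not state: "and" binds tighter than "or", parentheses are not supported, a missing key makes a term false, quoted strings must not contain " and " or " or ", and dotted top-level keys such as video1.source_type are matched by trying the longest dotted key first. The names lookup, evaluate, and _check are hypothetical.

```python
import operator
import re
from typing import Any

# Comparison operators from the list above; two-character ones first so ">=" beats ">".
_COMPARISON = re.compile(r"^\s*([\w.]+)\s*(>=|<=|!=|=|>|<)\s*(.+?)\s*$")
_OPS = {
    ">": operator.gt, "<": operator.lt, ">=": operator.ge,
    "<=": operator.le, "=": operator.eq, "!=": operator.ne,
}


def lookup(meta: dict, key_path: str) -> Any:
    """Resolve a dot-notation KEY_PATH; returns None if any part is missing."""
    parts = key_path.split(".")
    node: Any = meta
    i = 0
    while i < len(parts):
        if not isinstance(node, dict):
            return None
        # Try the longest dotted key first, so "video1.source_type" resolves.
        for j in range(len(parts), i, -1):
            key = ".".join(parts[i:j])
            if key in node:
                node = node[key]
                i = j
                break
        else:
            return None
    return node


def _check(term: str, meta: dict) -> bool:
    """Evaluate one comparison, or a bare KEY_PATH as a boolean."""
    match = _COMPARISON.match(term)
    if match is None:
        return lookup(meta, term.strip()) is True
    key_path, op, raw = match.groups()
    actual = lookup(meta, key_path)
    if actual is None:
        return False  # a missing key never triggers
    if len(raw) >= 2 and raw[0] == raw[-1] == '"':
        expected: Any = raw[1:-1]  # quoted value -> string comparison
    else:
        expected = float(raw)      # unquoted value -> numeric comparison
    try:
        return bool(_OPS[op](actual, expected))
    except TypeError:
        return False  # e.g. ordering a string against a number


def evaluate(condition: str, meta: dict) -> bool:
    """True if any 'or' clause has all of its 'and' terms true."""
    return any(
        all(_check(term, meta) for term in re.split(r"\s+and\s+", clause))
        for clause in re.split(r"\s+or\s+", condition.strip())
    )


meta = {
    "video1.source_type": "stream",
    "nodes": {"annotate_presence1": {"rois": {"roi1": {"total_objects": 2}}}},
}
print(evaluate("nodes.annotate_presence1.rois.roi1.total_objects > 0", meta))
# → True
```

Splitting on "or" first and "and" second is what gives "and" the higher precedence in this sketch; check the Trigger Conditions Guide for the exact rules Lumeo applies.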

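Tip: If you need complex logic for the trigger, it is often easier to put that logic into a Function Node and add custom metadata to the frame:

```python
# Runs inside a Function Node, where `frame` is the frame being processed.
# Replace `complex_logic` with your own condition (any expression that evaluates to True or False).
if complex_logic:
    frame.meta().set_field('usermetadata', {'value': True})
else:
    frame.meta().set_field('usermetadata', {'value': False})

# Save the updated metadata back to the frame.
frame.meta().save()
```

You can then use that field as a trigger condition (for example, usermetadata.value).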

Node Reference

See the Node Reference section for details about each node.

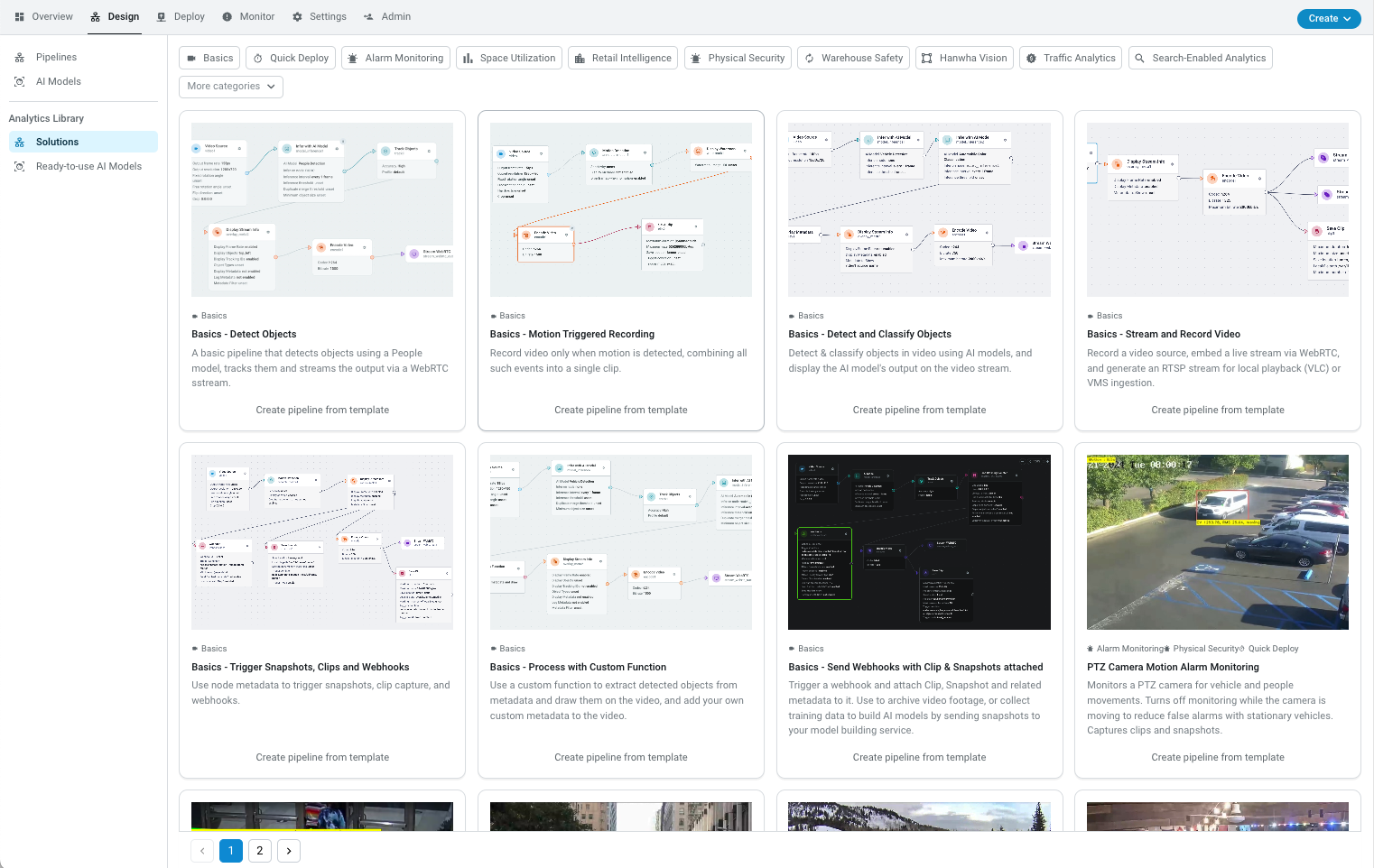

Workflow Templates

You can find an ever-growing list of ready-to-use workflow templates in the Analytics Library, under Solutions.

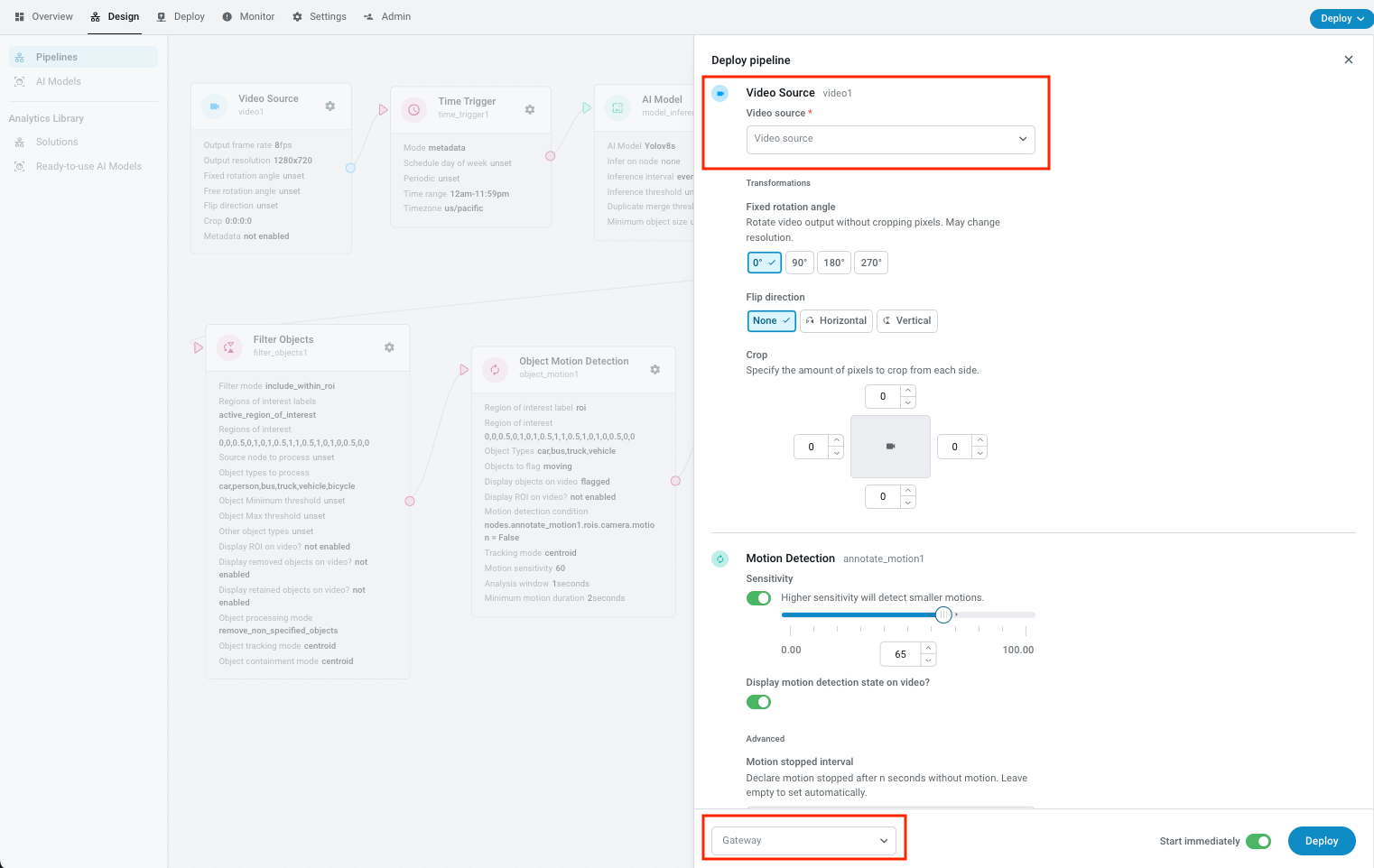

Workflow Deployments

To run the Workflow, you must deploy it with a specific video source on a Gateway.

See below for quick steps to deploy the Workflow from the Workflow Editor, then head over to the Deployments page to learn more about managing deployments.

Deploy from Workflow Editor

You can create a Deployment using the Deploy button in the Workflow Editor, or the "Deploy workflow" button on the Workflow Deployments page. Deploying from the Workflow Editor is particularly helpful when building or tweaking a specific analytic.

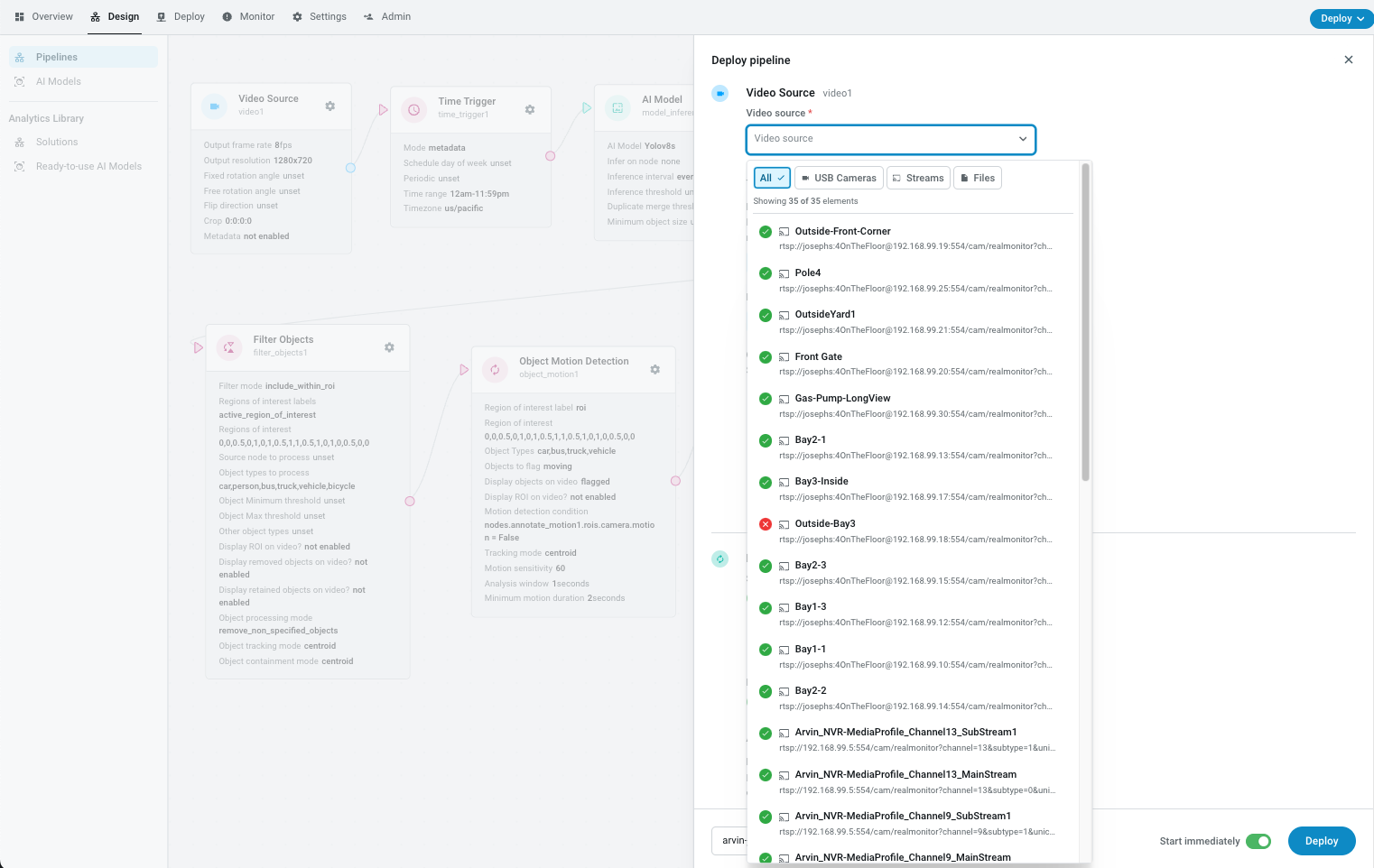

As a part of the deployment process, you will select the source for Video Source nodes, pick a Gateway, and customize required node properties.

Deploy a workflow. Selecting a Gateway first will limit sources to ones accessible by that gateway.

Select a video source. Selecting a source will limit Gateway choices to ones which can process this source.
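The two-way filtering in these steps can be modeled as a single access map from Gateways to the video sources each one can reach: picking a Gateway narrows the source list, and picking a source narrows the Gateway list. This is a hypothetical sketch; the ACCESS map, the Gateway and source names, and both helper functions are illustrative, not part of Lumeo's API.

```python
from typing import Dict, List, Set

# Hypothetical access map: which video sources each Gateway can reach.
ACCESS: Dict[str, Set[str]] = {
    "gateway-a": {"lobby-cam", "parking-cam"},
    "gateway-b": {"parking-cam"},
}


def sources_for(gateway: str, access: Dict[str, Set[str]] = ACCESS) -> List[str]:
    """Gateway chosen first: only sources that Gateway can reach remain."""
    return sorted(access.get(gateway, set()))


def gateways_for(source: str, access: Dict[str, Set[str]] = ACCESS) -> List[str]:
    """Source chosen first: only Gateways that can process it remain."""
    return sorted(gw for gw, sources in access.items() if source in sources)


print(sources_for("gateway-b"))    # → ['parking-cam']
print(gateways_for("parking-cam"))  # → ['gateway-a', 'gateway-b']
```

Both filters read the same map, which is why the order in which you pick a Gateway or a source does not change which combinations are valid.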

Update a deployment

If you are changing the workflow, you can use the Update deployments button in the Workflow Editor to update existing deployments instead of creating a new one every time. This is especially helpful when you are building or debugging a Workflow.

API Reference

See pipelines
