Publish to BigQuery

Publish metrics to Google BigQuery for charting and visualization.

Overview

The Publish to BigQuery node publishes metrics to Google BigQuery, where they can be charted, analyzed, and used in reports.

Properties

| Property | Description | Type | Default | Required |
|---|---|---|---|---|
| bigquery_project_id | BigQuery project ID | string | null | Yes |
| bigquery_dataset_id | BigQuery dataset ID | string | null | Yes |
| bigquery_credentials | BigQuery credentials | string | null | Yes |
| node_ids_to_publish | Node metadata to publish | node | null | Yes |
| trigger | Publish node metadata only when this condition evaluates to true | trigger-condition | null | No |
| objects_to_publish | Publish object metadata depending on object's properties meeting the specified criteria. Options: Specified by node, Specified by metadata pattern, Specific types | enum | null | Yes |
| object_match_patterns | Publish object metadata for objects specified by this pattern. Conditional on objects_to_publish being pattern_matched_objects | metadata-list | null | No |
| object_types | Object types to publish. Leave blank to process all. Conditional on objects_to_publish being type_matched_objects | model-label | null | No |
| event_type | Metrics event type to publish. This can be used to filter or count specific events in the dashboard. | string | null | No |
| event_trigger | Log a metrics event when this condition evaluates to true. | trigger-condition | null | No |
| metrics_tag | Tag published metrics with this tag to make it easier to filter them in the Dashboard. | string | null | No |
| objects_only_new_updated | If true, only publish objects when first seen. If false, publish both new and updated objects | bool | true | Yes |
| object_track_lifespan | Number of frames for which an object is tracked to prevent duplicate reporting when the new/updated option is set. Unit: frames | number | 600 | Yes |
| interval | Min. time between consecutive publish actions. Unit: seconds | float | 1 | Yes |
| use_stream_clock | Use timestamps from stream? | bool | false | Yes |
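As a rough illustration of how these properties fit together, the sketch below collects them in a Python dictionary and reports any property marked Required that still has no value once the documented defaults are applied. The property names and defaults come from the table; the dictionary layout, the `missing_required` helper, and all placeholder values are assumptions made for illustration, since this page does not describe how the node's settings are stored.

```python
# Property names and defaults are taken from the Properties table.
# The dict layout, the helper, and the placeholder values are hypothetical.
REQUIRED = (
    "bigquery_project_id",
    "bigquery_dataset_id",
    "bigquery_credentials",
    "node_ids_to_publish",
    "objects_to_publish",
    "objects_only_new_updated",
    "object_track_lifespan",
    "interval",
    "use_stream_clock",
)

DEFAULTS = {
    "objects_only_new_updated": True,
    "object_track_lifespan": 600,  # frames
    "interval": 1.0,               # seconds
    "use_stream_clock": False,
}


def missing_required(settings):
    """Return required property names that have no value after defaults are applied."""
    merged = {**DEFAULTS, **settings}
    return [name for name in REQUIRED if merged.get(name) is None]


example = {
    "bigquery_project_id": "my-project",                    # placeholder
    "bigquery_dataset_id": "my_dataset",                    # placeholder
    "bigquery_credentials": "<service account key JSON>",   # placeholder
    "node_ids_to_publish": "node1",                         # placeholder node reference
    "objects_to_publish": "type_matched_objects",
    "object_types": None,  # left blank: process all object types
}

print(missing_required(example))  # prints []
```

Properties with a non-null default (such as `interval`) never show up as missing, which mirrors the table: only the five required properties whose default is null need to be filled in by hand.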

BigQuery Project & Dataset ID

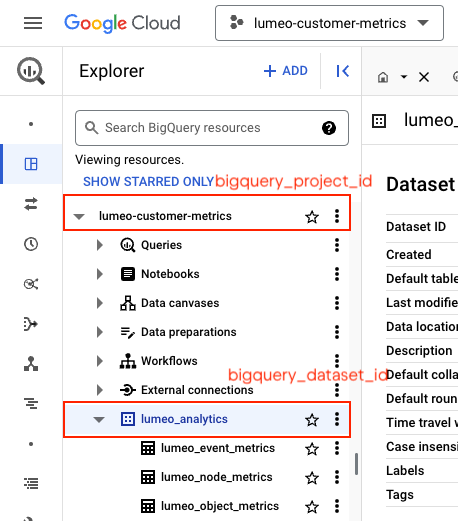

This node creates tables within the specified BigQuery project and dataset. Specify their IDs as shown in the image.
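Once the node has created its tables, queries reference them by project ID, dataset ID, and table name joined with dots. The sketch below builds such a backtick-quoted reference. The `qualified_table` helper and the values `my-project`, `my_dataset`, and `metrics` are placeholders for illustration; this page does not list the names of the tables the node creates.

```python
def qualified_table(project_id, dataset_id, table_name):
    """Build a backtick-quoted project.dataset.table reference for a query."""
    for part in (project_id, dataset_id, table_name):
        # Reject empty parts and stray backticks, which would break the quoting.
        if not part or "`" in part:
            raise ValueError(f"invalid identifier part: {part!r}")
    return f"`{project_id}.{dataset_id}.{table_name}`"


# All three names below are placeholders, not names used by the node.
table_ref = qualified_table("my-project", "my_dataset", "metrics")
query = f"SELECT COUNT(*) FROM {table_ref}"
print(query)
```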

BigQuery Credentials

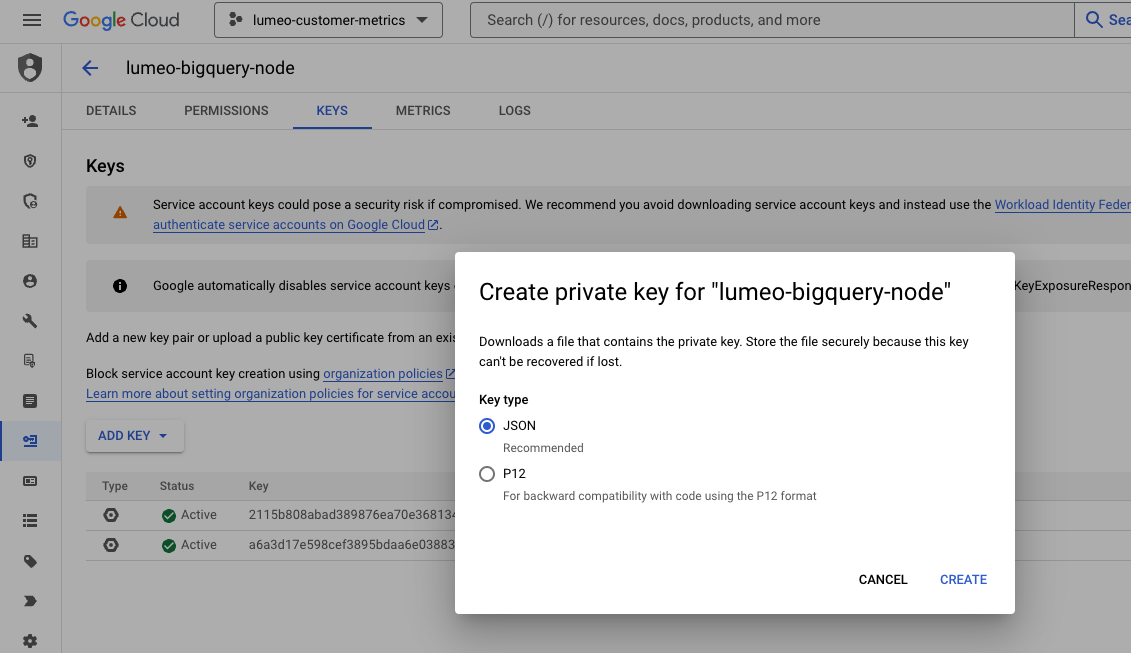

The bigquery_credentials field expects a JSON-format private key for a service account that has the BigQuery Data Editor role for your BigQuery project and dataset.

You can create one as follows:

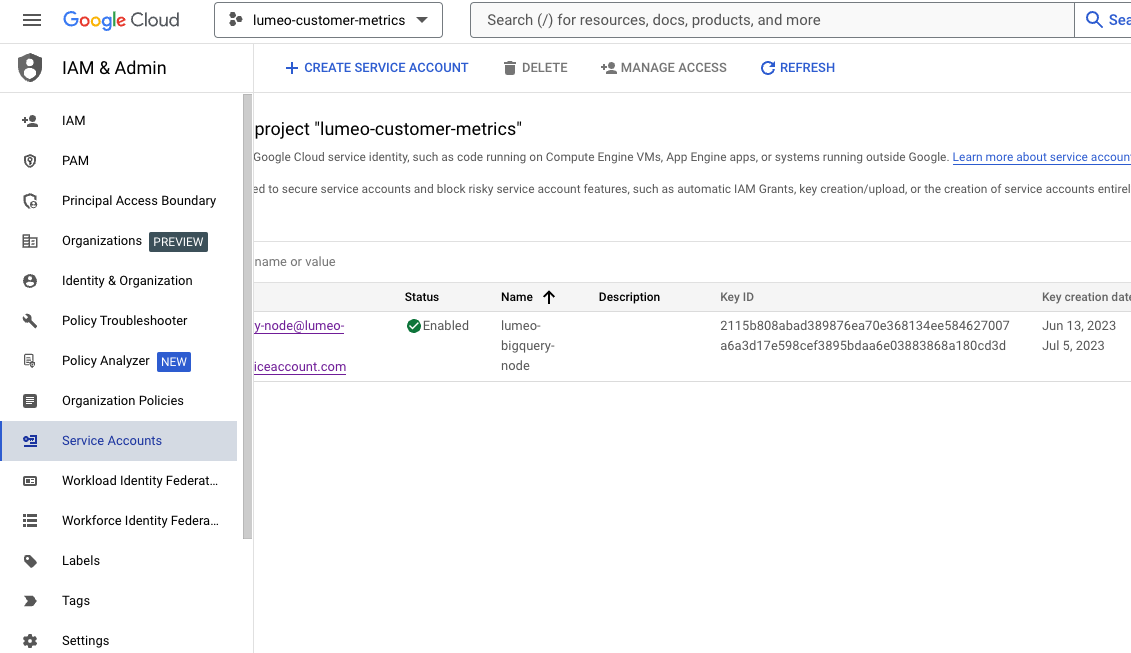

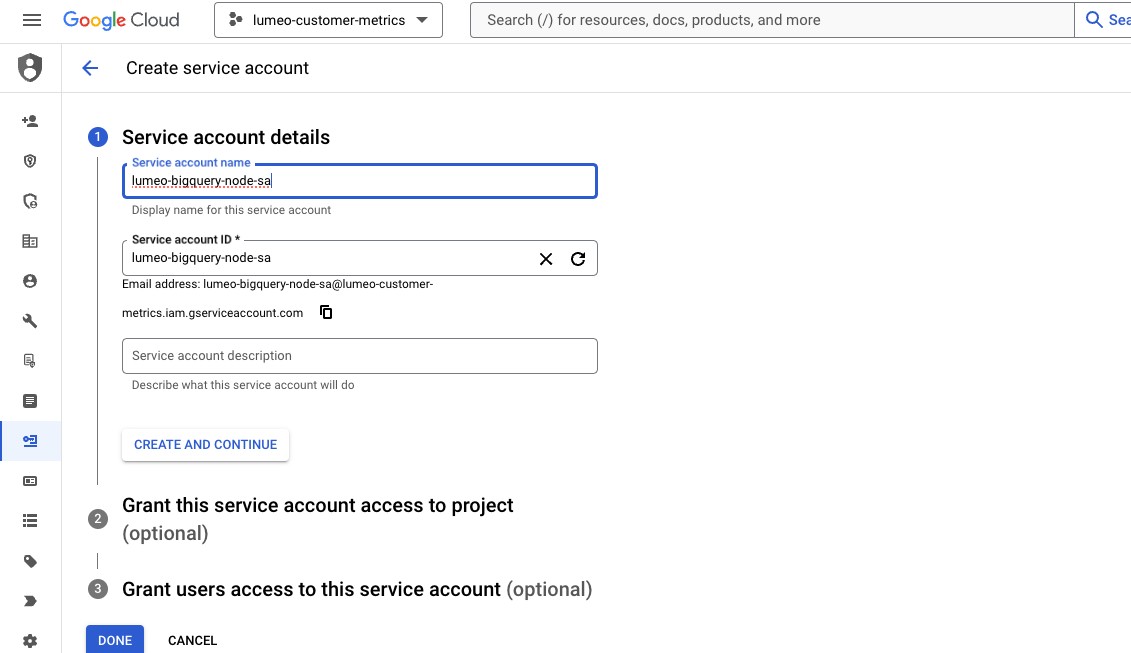

- In the Google Cloud console, go to IAM & Admin -> Service Accounts and create a service account.

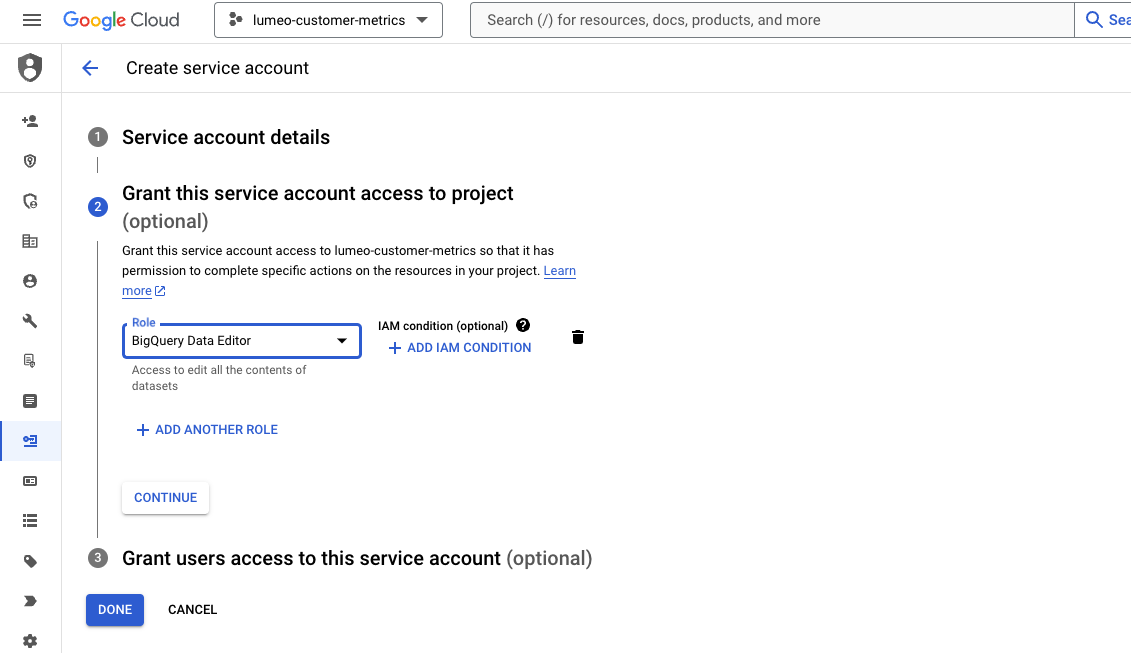

- Configure the service account with the BigQuery Data Editor role.

- Generate a JSON private key for the service account.

- Download the key and use its contents as the value of the bigquery_credentials field.
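Before pasting the downloaded key into the field, it can help to confirm that the text is intact. The following is a minimal sketch, assuming the key follows the usual layout of a Google service account JSON key file (`type`, `project_id`, `private_key`, `client_email`, among other fields). The `check_service_account_key` helper and the sample values are hypothetical, and the check looks only at the key text: it does not contact Google or verify that the account has the BigQuery Data Editor role.

```python
import json

# Subset of the fields found in a service account JSON key file.
EXPECTED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(key_text):
    """Return a list of problems found in the key text (empty if none)."""
    try:
        key = json.loads(key_text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]
    problems = [f"missing field: {name}" for name in EXPECTED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append("type is not 'service_account'")
    return problems


# Placeholder key contents; a real key file contains more fields.
sample = json.dumps({
    "type": "service_account",
    "project_id": "my-project",
    "private_key": "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n",
    "client_email": "publisher@my-project.iam.gserviceaccount.com",
})

print(check_service_account_key(sample))  # prints []
print(check_service_account_key("{}"))    # lists the four missing fields
```

An empty list means the text parsed as JSON and carries the fields checked here. A truncated copy-paste typically shows up as a "not valid JSON" problem.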
