Camera AI Models

Follow this guide to use a camera's built-in AI models with Lumeo.

Background

Many AI-enabled cameras provide built-in object detection. Lumeo can extract object detections and tracking IDs from a camera's built-in AI models instead of running AI models on a Lumeo Gateway. This can be beneficial because:

- It lets you use models that may be available on the camera but not in Lumeo.

- It offloads AI model inference to the camera, which lets you process more streams on the Gateway.

Importing Camera AI model metadata is only supported on Lumeo Gateway version 2.4.23 or later.

Camera configuration

Lumeo can import AI model inference results (object detections and tracking information) from cameras that publish this metadata in the RTSP stream as ONVIF Scene Description XML.

Lumeo currently imports only object detections, tracking IDs, and object classes. Importing analytics rule metadata, such as line crossings, is not supported.
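To make the imported fields concrete, here is a minimal Python sketch that pulls the object class, tracking ID, and bounding box out of one ONVIF scene description frame. The sample XML is hand-written for illustration and follows the ONVIF schema element names (`Frame`, `Object`, `BoundingBox`, `Class/Type`) as commonly emitted; real cameras may add or omit elements. The function name `parse_detections` is hypothetical, and this is not a description of how Lumeo parses the stream internally.

```python
import xml.etree.ElementTree as ET

# ONVIF schema namespace used by scene description elements.
NS = {"tt": "http://www.onvif.org/ver10/schema"}

# Hand-written sample frame (an assumption, not captured from a real camera).
SAMPLE = """
<tt:MetadataStream xmlns:tt="http://www.onvif.org/ver10/schema">
  <tt:VideoAnalytics>
    <tt:Frame UtcTime="2024-01-01T12:00:00Z">
      <tt:Object ObjectId="12">
        <tt:Appearance>
          <tt:Shape>
            <tt:BoundingBox left="-0.5" top="0.5" right="0.0" bottom="-0.5"/>
          </tt:Shape>
          <tt:Class>
            <tt:Type Likelihood="0.9">Human</tt:Type>
          </tt:Class>
        </tt:Appearance>
      </tt:Object>
    </tt:Frame>
  </tt:VideoAnalytics>
</tt:MetadataStream>
"""


def parse_detections(xml_text):
    """Return one dict per detected object found in the metadata XML."""
    root = ET.fromstring(xml_text.strip())
    detections = []
    for frame in root.iterfind(".//tt:Frame", NS):
        for obj in frame.iterfind("tt:Object", NS):
            box = obj.find(".//tt:BoundingBox", NS)
            label = obj.find(".//tt:Class/tt:Type", NS)
            detections.append({
                "utc_time": frame.get("UtcTime"),
                # ObjectId is the per-object identifier that serves as the tracking ID.
                "tracking_id": obj.get("ObjectId"),
                "label": label.text if label is not None else None,
                "box": (
                    {k: float(box.get(k)) for k in ("left", "top", "right", "bottom")}
                    if box is not None else None
                ),
            })
    return detections


if __name__ == "__main__":
    for detection in parse_detections(SAMPLE):
        print(detection)
```

Anything outside these fields, such as rule events for line crossings, would be ignored by a parser like this, which mirrors the limitation described above.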

The process for enabling ONVIF analytics metadata in RTSP streams varies by camera model and brand, so check your camera's web interface or settings for more details. In general, you will need to follow these steps:

- Ensure your camera provides built-in AI models. Many AI-enabled commercial cameras do, while most consumer-grade cameras that lack ONVIF support do not.

- Enable object detection analytics in the camera.

- On some camera models, enable the analytics metadata stream for the ONVIF profile.

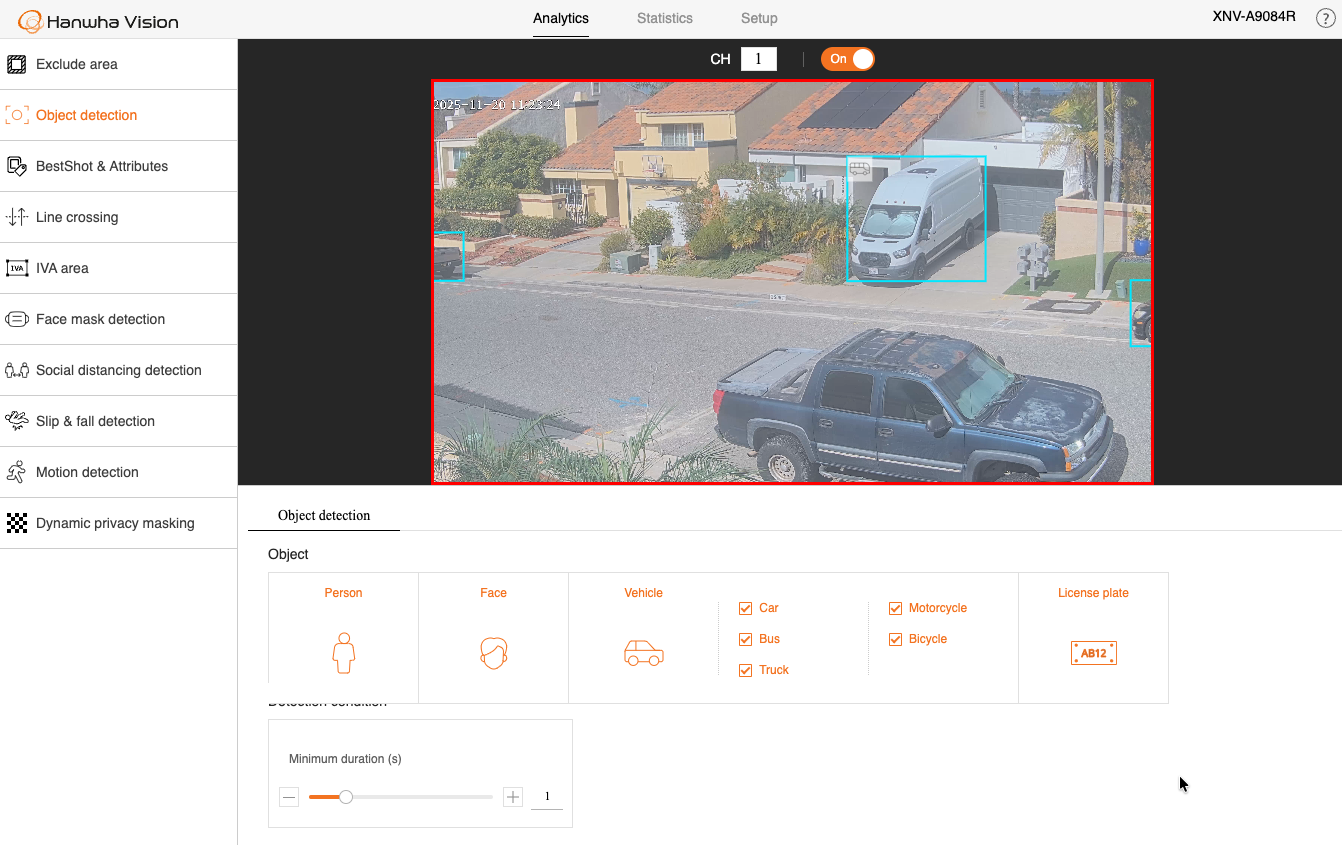
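One way to confirm that the camera is actually publishing analytics metadata is to inspect the SDP that the camera returns for its RTSP stream and look for an ONVIF metadata track. The sketch below assumes you have already obtained the SDP text by some other means (for example from an RTSP client's debug output). The helper name `has_onvif_metadata_track` and the sample SDP are hypothetical; the check relies on the `vnd.onvif.metadata` RTP encoding name used for ONVIF metadata streams.

```python
def has_onvif_metadata_track(sdp_text):
    """Return True if the SDP advertises an ONVIF metadata RTP payload."""
    for line in sdp_text.splitlines():
        line = line.strip().lower()
        # ONVIF metadata is announced with an rtpmap attribute whose
        # encoding name is vnd.onvif.metadata.
        if line.startswith("a=rtpmap:") and "vnd.onvif.metadata" in line:
            return True
    return False


# Hand-written sample SDP (an assumption, not captured from a real camera).
SAMPLE_SDP = """v=0
o=- 0 0 IN IP4 192.0.2.10
s=Media Presentation
t=0 0
m=video 0 RTP/AVP 96
a=rtpmap:96 H264/90000
m=application 0 RTP/AVP 107
a=rtpmap:107 vnd.onvif.metadata/90000
"""

if __name__ == "__main__":
    print(has_onvif_metadata_track(SAMPLE_SDP))
```

If the check fails for your camera, the metadata stream is likely not enabled for the ONVIF profile that backs the RTSP URL you are using.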

Hanwha Vision cameras

Enable Object detection and the object types you need under WiseAI -> Object detection settings.

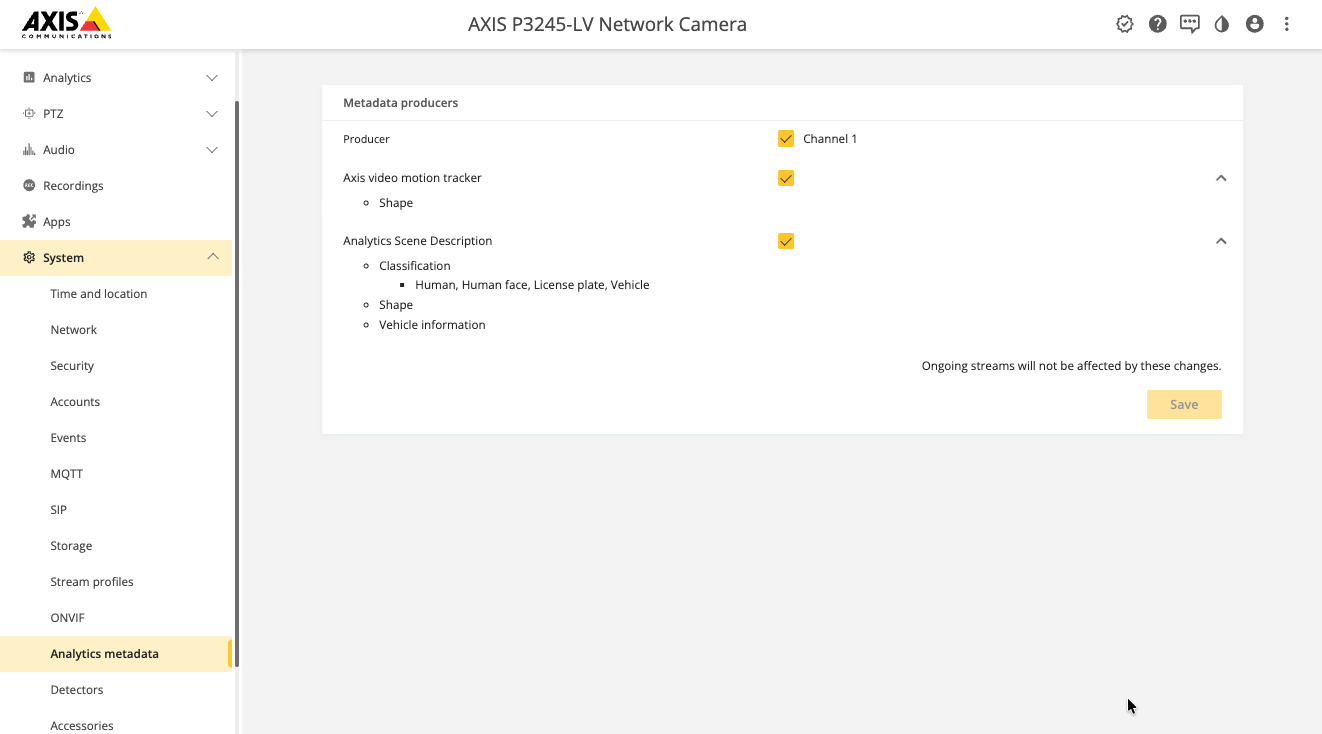

Axis cameras

- Enable Axis Object Analytics.

- Configure the Object Analytics app to detect specific objects.

- Enable the analytics metadata producer.

- Configure the ONVIF profile to include analytics metadata.

Lumeo configuration

Ready-to-use Solution templates

Look for templates in the Solutions gallery under the "Camera Assist" category. These templates are designed for cameras that have AI object detection enabled. You may need to adjust object labels in the template settings if your camera doesn't use standard object labels.

Pipeline configuration

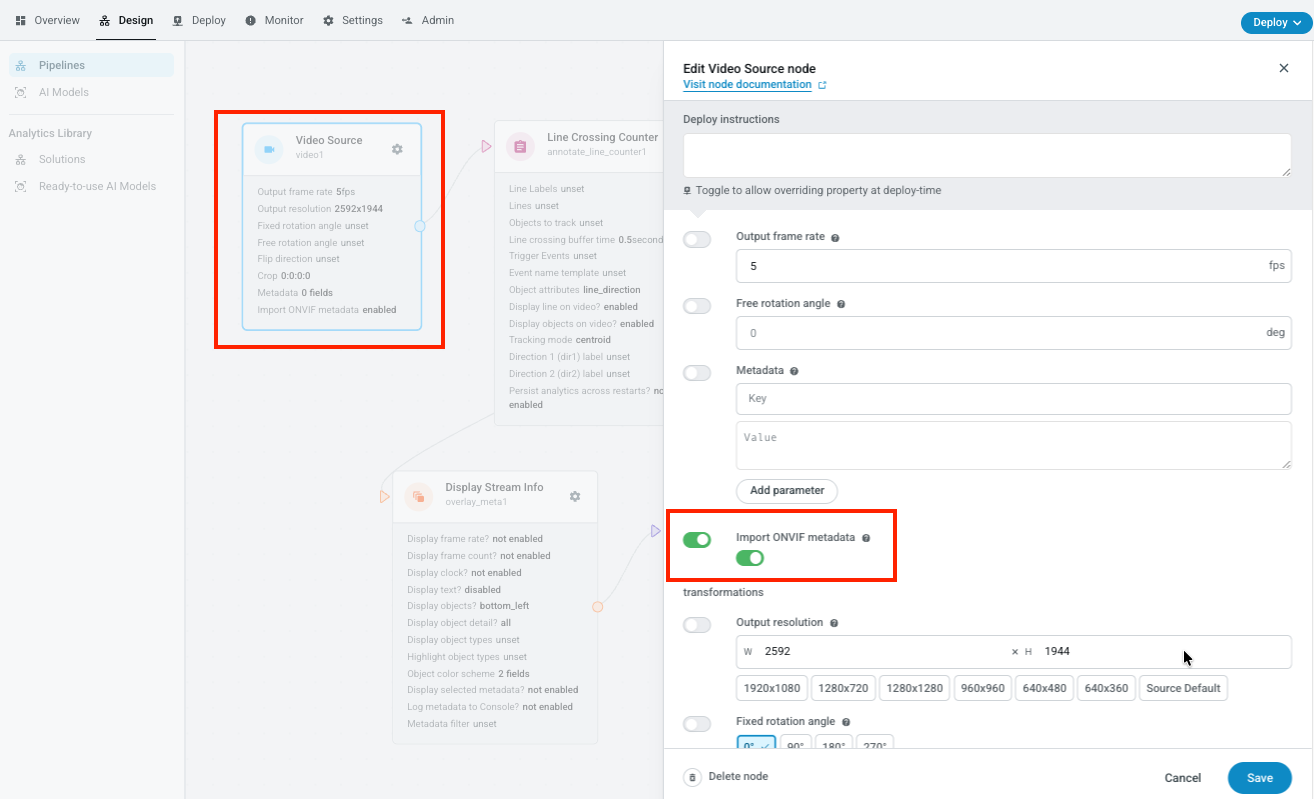

If you need to modify a pre-built Pipeline or template to use Camera AI models, here's how to do it:

- In the pipeline's Video Source node, enable the "Import ONVIF metadata" option.


- Ensure there is no AI Model node that detects the same objects as the Camera AI models.

- Ensure there is a Track Objects node to fill in the gaps in the ONVIF metadata.

- If there are no other AI models, connect the output of the Video Source node to the Track Objects node, then connect the Track Objects node to the other analytics nodes.

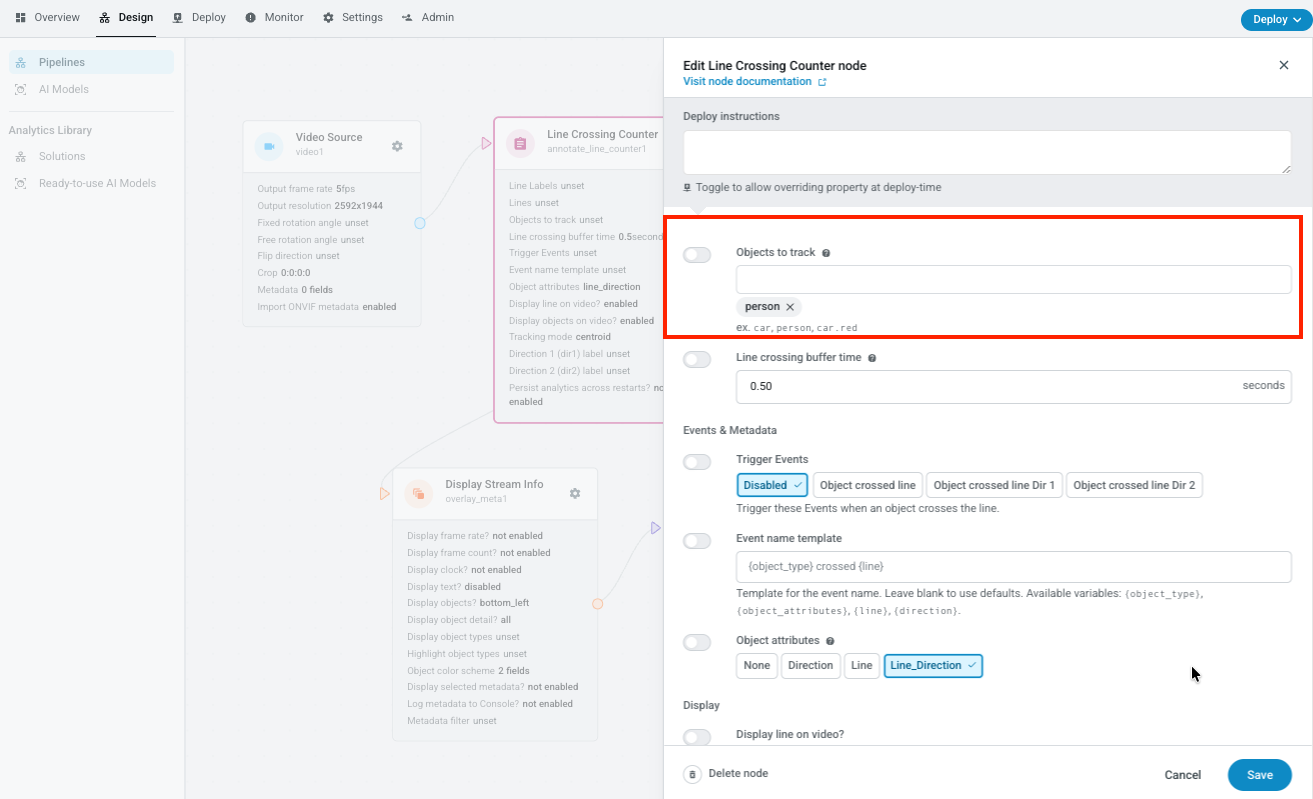

- Adjust the object types in other nodes' settings to match the object labels output by the Camera AI model.
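When adjusting labels, it can help to compare the labels the camera actually emits with the labels your nodes are configured to look for. The following Python sketch is a hypothetical helper, not part of Lumeo: it assumes you have collected a list of observed label strings (for example by inspecting the camera's metadata) and a list of the labels entered in your node settings, and it reports any configured label the camera never produced.

```python
from collections import Counter


def label_report(observed_labels, expected_labels):
    """Count observed camera labels and list expected labels never seen.

    Both arguments are plain lists of strings; comparison is case-sensitive.
    """
    seen = Counter(label.strip() for label in observed_labels if label)
    missing = sorted(set(expected_labels) - set(seen))
    return seen, missing


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    observed = ["Human", "Human", "Vehicle"]
    configured = ["person", "Vehicle"]
    seen, missing = label_report(observed, configured)
    print("Seen:", dict(seen))
    print("Configured but never seen:", missing)
```

In this made-up example, `person` is configured but the camera reports `Human`, so the node setting would need to be changed to `Human`.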

